10 AI Skills You MUST Learn in 2025

Complete Guide for Advanced Programmers

🚀 Introduction: The AI Revolution in 2025

As we navigate through 2025, the AI landscape has evolved dramatically, creating unprecedented opportunities and challenges for advanced programmers. The rapid advancement of Large Language Models (LLMs), the democratization of AI tools, and the emergence of new paradigms like AI agents and multimodal systems have fundamentally transformed what it means to be an AI practitioner.

This comprehensive guide identifies the 10 most critical AI skills that advanced programmers must master to stay competitive and innovative in the rapidly evolving AI ecosystem. These skills represent the intersection of cutting-edge research, practical implementation, and real-world applications that are shaping the future of technology.

💡 Why These Skills Matter:

• Industry demand for AI expertise has increased by 300% since 2023

• 85% of Fortune 500 companies are actively implementing AI solutions

• Average AI specialist salary has grown by 40% in the last 18 months

• New AI job roles are emerging faster than traditional tech positions

1️⃣ Large Language Models (LLMs) Mastery

Large Language Models (LLMs) have emerged as the backbone of modern AI systems, enabling breakthroughs in natural language processing, generative AI, and multimodal interaction. From automated customer support to code generation, LLMs are powering a new wave of intelligent applications.

As the AI landscape evolves rapidly, mastering LLMs has become a core competency for developers, data scientists, and architects involved in building intelligent, scalable, and adaptive systems.

🔹 Core LLM Concepts

Architecture Understanding: At the heart of Large Language Models lies the Transformer architecture, which revolutionized natural language processing through its attention mechanism. Self-attention lets the model weigh the importance of every other token in a sequence when encoding a given token, which is how it captures long-range relationships and dependencies. Understanding tokenization strategies is crucial, as tokenization determines how text is broken into the units the model processes. Modern LLMs use subword tokenizers such as byte-pair encoding (BPE), which balance vocabulary size against coverage of rare words, while positional encoding tells the model the order of tokens in a sequence. Context window limits present both technical challenges and opportunities for optimization, since the cost of standard self-attention grows quadratically with sequence length.
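To make the attention mechanism concrete, here is a minimal sketch of single-head scaled dot-product self-attention in plain Python. It assumes no masking and no learned projection matrices, and the toy embeddings are made-up numbers; real models use optimized tensor libraries.

```python
import math

def softmax(xs):
    """Numerically stable softmax over a list of floats."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]

def self_attention(queries, keys, values):
    """Scaled dot-product attention for one head, without masking."""
    d_k = len(keys[0])
    outputs, weights = [], []
    for q in queries:
        # Similarity of this query to every key, scaled by sqrt(d_k).
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d_k) for k in keys]
        w = softmax(scores)
        # Each output is the attention-weighted average of the value vectors.
        out = [sum(wj * v[i] for wj, v in zip(w, values)) for i in range(len(values[0]))]
        weights.append(w)
        outputs.append(out)
    return outputs, weights

# Toy 3-token sequence with 2-dimensional embeddings (illustrative values only).
x = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
outputs, weights = self_attention(x, x, x)
print(weights[0])  # how strongly token 0 attends to tokens 0, 1, 2
```

Each row of `weights` sums to 1, so every output vector is a convex combination of the value vectors.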

Training Paradigms: The training of Large Language Models follows a multi-stage process that begins with pre-training on massive text corpora. During this phase, models learn language patterns, grammar, and world knowledge through self-supervised objectives: next-token prediction (causal language modeling) for decoder-only models such as the GPT family, and masked language modeling for encoder models such as BERT. Fine-tuning then adapts these pre-trained models to specific domains or tasks, while instruction tuning teaches models to follow human instructions more effectively. Reinforcement Learning from Human Feedback (RLHF) aligns model outputs with human preferences by using human feedback to guide model behavior. Parameter-efficient fine-tuning techniques like LoRA and QLoRA enable adaptation of large models with minimal computational resources, making them accessible to a broader range of developers and organizations.
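The idea behind LoRA can be sketched in a few lines: the pre-trained weight matrix W stays frozen, and only two small matrices A and B are trained, so the effective weight becomes W + (alpha / r) * B A. The dimensions, values, and helper names below are illustrative assumptions, not any library's API.

```python
def matvec(m, v):
    """Multiply matrix m (list of rows) by vector v."""
    return [sum(a * b for a, b in zip(row, v)) for row in m]

def lora_forward(W, A, B, x, alpha, r):
    """y = W x + (alpha / r) * B (A x); W is frozen, only A and B are trained."""
    base = matvec(W, x)
    update = matvec(B, matvec(A, x))
    scale = alpha / r
    return [b + scale * u for b, u in zip(base, update)]

def lora_param_count(d_out, d_in, r):
    """Trainable parameters in the low-rank pair A (r x d_in) and B (d_out x r)."""
    return r * (d_in + d_out)

d_out, d_in, r = 4, 6, 2
W = [[0.1 * (i + j) for j in range(d_in)] for i in range(d_out)]  # frozen weights
A = [[0.01 * (i - j) for j in range(d_in)] for i in range(r)]     # trainable
B = [[0.0] * r for _ in range(d_out)]                             # trainable, starts at zero

x = [1.0, 2.0, 3.0, 4.0, 5.0, 6.0]
y0 = lora_forward(W, A, B, x, alpha=4, r=r)

# For a 4096 x 4096 layer at rank 8: full fine-tuning vs. LoRA parameter counts.
print(4096 * 4096, lora_param_count(4096, 4096, 8))
```

Because B starts at zero, the adapted layer initially behaves exactly like the frozen one, and training only has to learn the low-rank correction.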

🔹 Practical Implementation

Model Integration: Integrating Large Language Models into production systems requires careful consideration of API design, performance optimization, and cost management. Modern LLM APIs from providers like OpenAI, Anthropic, and open-source alternatives offer standardized interfaces for model interaction, but each comes with unique characteristics and limitations. Prompt engineering has emerged as a critical skill, involving the art of crafting inputs that elicit desired responses from models. This includes techniques like few-shot learning, chain-of-thought prompting, and role-based instruction. Context management becomes increasingly important as applications grow in complexity, requiring sophisticated conversation handling that maintains coherence across multiple interactions while managing token limits and costs.
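As one illustration of context management, the sketch below keeps the system message and as many of the most recent turns as fit a token budget. The word-count token estimate is a deliberate simplification (a real application would use the provider's tokenizer), and `trim_history` is a hypothetical helper name.

```python
def estimate_tokens(text):
    """Rough token estimate: whitespace-separated words (not a real tokenizer)."""
    return len(text.split())

def trim_history(messages, max_tokens):
    """Keep system messages plus the newest messages that fit within max_tokens."""
    system = [m for m in messages if m["role"] == "system"]
    rest = [m for m in messages if m["role"] != "system"]
    budget = max_tokens - sum(estimate_tokens(m["content"]) for m in system)
    kept = []
    for m in reversed(rest):  # walk from newest to oldest
        cost = estimate_tokens(m["content"])
        if cost > budget:
            break
        kept.append(m)
        budget -= cost
    return system + list(reversed(kept))

messages = [
    {"role": "system", "content": "You are a helpful assistant."},
    {"role": "user", "content": "Explain transformers in one paragraph please."},
    {"role": "assistant", "content": "Transformers use attention to relate tokens."},
    {"role": "user", "content": "And what is LoRA?"},
]
trimmed = trim_history(messages, max_tokens=16)
print([m["role"] for m in trimmed])
```

Dropping the oldest turns first is only one policy; summarizing older turns is a common alternative when earlier context still matters.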

Advanced Techniques: Retrieval-Augmented Generation (RAG) represents a powerful paradigm that combines the generative capabilities of LLMs with external knowledge sources. This approach enables models to provide more accurate and up-to-date information by retrieving relevant documents or data before generating responses. Function calling and tool use integration allow LLMs to interact with external systems and APIs, transforming them from pure text generators into intelligent agents capable of performing actions. Multi-modal LLM applications extend beyond text to handle images, audio, and video, opening new possibilities for AI applications. Custom model fine-tuning pipelines enable organizations to create specialized models tailored to their specific domains and use cases, though this requires careful consideration of data quality, computational resources, and ethical implications.
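The retrieval step of RAG can be sketched without any external services. The example below uses a toy bag-of-words similarity in place of a learned embedding model and a vector database, and the documents and prompt template are invented for illustration.

```python
import math
from collections import Counter

def embed(text):
    """Toy bag-of-words 'embedding'; a real system would use a learned embedding model."""
    return Counter(text.lower().split())

def cosine(a, b):
    """Cosine similarity between two sparse word-count vectors."""
    dot = sum(a[t] * b[t] for t in a)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0

def retrieve(query, documents, k=1):
    """Return the k documents most similar to the query."""
    q = embed(query)
    return sorted(documents, key=lambda d: cosine(q, embed(d)), reverse=True)[:k]

def build_prompt(query, documents, k=1):
    """Place retrieved context ahead of the question before calling an LLM."""
    context = "\n".join(retrieve(query, documents, k))
    return f"Answer using only this context:\n{context}\n\nQuestion: {query}"

docs = [
    "LoRA adapts large models by training small low-rank matrices.",
    "Kubernetes schedules containers across a cluster of machines.",
    "Differential privacy adds calibrated noise to protect individuals.",
]
query = "how does kubernetes schedule containers"
print(build_prompt(query, docs))
```

The resulting prompt would then be sent to whichever model the application uses; that call is omitted here because it depends on the provider.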

🎯 Key Takeaway: LLMs are not just tools—they're platforms for building intelligent applications. Mastery involves understanding both the underlying architecture and practical implementation strategies.

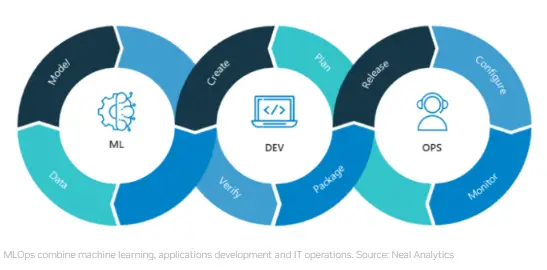

2️⃣ MLOps and AI Infrastructure

MLOps has evolved from a niche practice to a critical discipline that bridges the gap between AI research and production deployment. Advanced programmers must understand how to build scalable, reliable, and maintainable AI systems that can handle the complexities of real-world AI applications.

🔹 Infrastructure Components

Model Lifecycle Management: Effective MLOps begins with comprehensive model lifecycle management that spans from initial development to production deployment and ongoing maintenance. This encompasses sophisticated model versioning systems that track not just code changes, but also data versions, hyperparameters, and model artifacts. Experiment tracking platforms like MLflow and Weights & Biases enable reproducible research by automatically logging all aspects of model training, including metrics, parameters, and artifacts. Model registries serve as centralized repositories for model storage, versioning, and deployment, while automated deployment pipelines ensure consistent and reliable model releases. A/B testing frameworks allow for systematic comparison of model performance in production environments, enabling data-driven decisions about model updates and improvements.
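For the A/B testing step, one common way to compare two model variants on a binary outcome (for example, click or no click) is a two-proportion z-test. The sketch below is a minimal version under that assumption; the traffic numbers are made up.

```python
import math

def two_proportion_z(successes_a, n_a, successes_b, n_b):
    """Return (z, two-sided p-value) for the difference in success rates B - A."""
    p_a, p_b = successes_a / n_a, successes_b / n_b
    pooled = (successes_a + successes_b) / (n_a + n_b)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    z = (p_b - p_a) / se
    # Two-sided p-value from the standard normal distribution.
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return z, p_value

# Hypothetical traffic: model A converts 100/1000 requests, model B 150/1000.
z, p = two_proportion_z(100, 1000, 150, 1000)
print(round(z, 2), round(p, 4))
```

A small p-value suggests the difference is unlikely to be noise, though production decisions usually also weigh effect size, latency, and cost.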

Scalable Infrastructure: Building scalable AI infrastructure requires deep understanding of distributed computing principles and cloud-native technologies. Kubernetes orchestration has become the de facto standard for managing AI workloads, providing automatic scaling, load balancing, and fault tolerance. GPU and TPU cluster management involves optimizing resource allocation, implementing efficient scheduling algorithms, and ensuring high utilization rates. Distributed training systems enable training of large models across multiple nodes, requiring expertise in data parallelism, model parallelism, and pipeline parallelism techniques. Auto-scaling mechanisms dynamically adjust computational resources based on demand, optimizing costs while maintaining performance.
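Auto-scaling decisions often reduce to a proportional rule. The sketch below is modeled on the rule used by the Kubernetes Horizontal Pod Autoscaler (desired replicas = ceil(current replicas × current metric / target metric)); tolerance bands and stabilization windows are omitted, and the replica limits are assumed defaults.

```python
import math

def desired_replicas(current_replicas, current_metric, target_metric,
                     min_replicas=1, max_replicas=10):
    """Scale replica count in proportion to how far the metric is from its target."""
    raw = math.ceil(current_replicas * current_metric / target_metric)
    # Clamp to the configured bounds.
    return max(min_replicas, min(max_replicas, raw))

# 4 inference pods at 90% average GPU utilization, targeting 60%.
print(desired_replicas(4, current_metric=90, target_metric=60))
```

The same rule scales down when utilization falls below target, which is where most of the cost savings come from.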

🔹 Production Best Practices

Monitoring and Observability: Production AI systems require comprehensive monitoring that goes beyond traditional application monitoring to include model-specific metrics and business impact measurements. Model performance monitoring tracks key metrics like accuracy, latency, and throughput, while drift detection systems identify when model performance degrades due to data distribution changes. Infrastructure health monitoring ensures optimal resource utilization and identifies potential bottlenecks before they impact system performance. Business metrics tracking connects model performance to real-world outcomes, enabling organizations to measure the actual impact of AI systems on their objectives. Alert systems provide early warning of issues, while incident response procedures ensure rapid resolution of problems.
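Drift detection can start with something as simple as comparing the distribution of a feature in production against its training-time distribution. The sketch below computes the Population Stability Index (PSI), one common choice; the bin count, the floor for empty bins, and the 0.25 alert threshold are rule-of-thumb assumptions rather than fixed standards.

```python
import math

def psi(expected, actual, bins=10):
    """Population Stability Index between a reference sample and a new sample."""
    lo, hi = min(expected), max(expected)
    width = (hi - lo) / bins or 1.0

    def hist(values):
        counts = [0] * bins
        for v in values:
            idx = int((v - lo) / width)
            counts[min(max(idx, 0), bins - 1)] += 1  # clip out-of-range values
        # A small floor avoids log(0) for empty bins.
        return [max(c / len(values), 1e-6) for c in counts]

    e, a = hist(expected), hist(actual)
    return sum((ai - ei) * math.log(ai / ei) for ei, ai in zip(e, a))

reference = [i / 100 for i in range(100)]        # training-time feature values
shifted = [0.5 + i / 200 for i in range(100)]    # production values, shifted upward

print(psi(reference, reference), psi(reference, shifted))
if psi(reference, shifted) > 0.25:
    print("drift alert")
```

In practice this check would run per feature on a schedule and feed the alerting system described above.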

Security and Compliance: AI systems in production must adhere to strict security and compliance requirements that protect sensitive data and ensure regulatory compliance. Data privacy measures include encryption at rest and in transit, access controls, and data anonymization techniques. Model security involves protecting against adversarial attacks, ensuring model integrity, and implementing secure model serving mechanisms. Access control and authentication systems manage who can access, modify, or deploy models, while audit trails provide comprehensive logging of all system activities. Compliance reporting ensures adherence to regulations like GDPR, HIPAA, and industry-specific requirements, while secure API protection prevents unauthorized access to model endpoints.

3️⃣ AI Agents and Autonomous Systems

AI agents represent the next evolution of AI systems, capable of autonomous decision-making and task execution. Understanding how to design, implement, and deploy intelligent agents is crucial for advanced programmers who want to build systems that can operate independently and adapt to changing environments.

🔹 Agent Architecture

Core Components: The architecture of AI agents encompasses several fundamental components that work together to create intelligent, autonomous systems. Planning and reasoning engines form the cognitive core of agents, enabling them to analyze situations, generate plans, and make decisions based on available information and goals. These engines often incorporate techniques from classical AI planning, reinforcement learning, and symbolic reasoning to handle complex decision-making scenarios.

Memory systems and knowledge representation mechanisms allow agents to maintain context, learn from experience, and build persistent knowledge bases. This includes both short-term working memory for immediate task execution and long-term memory for storing learned patterns, facts, and strategies. Tool integration and API orchestration capabilities enable agents to interact with external systems, databases, and services, expanding their capabilities beyond pure reasoning to include real-world actions and data access.

Multi-Agent Systems: When multiple agents work together, sophisticated coordination mechanisms become essential for effective collaboration. Agent communication protocols define how agents exchange information, coordinate actions, and resolve conflicts. These protocols range from simple message passing to complex negotiation and consensus-building mechanisms that enable agents to work toward shared objectives while respecting individual constraints and capabilities.

Coordination and collaboration mechanisms ensure that agents can work together efficiently without conflicts or redundant actions. This includes task allocation strategies, resource sharing protocols, and conflict resolution procedures. Scalable agent architectures support systems with hundreds or thousands of agents, requiring efficient communication patterns, distributed decision-making algorithms, and robust fault tolerance mechanisms.

🔹 Implementation Strategies

Development Frameworks: Modern AI agent development leverages specialized frameworks and libraries that provide the building blocks for creating sophisticated autonomous systems. LangChain, AutoGen, and similar frameworks offer high-level abstractions for agent development, including memory management, tool integration, and conversation handling. These frameworks significantly reduce the complexity of building agents while providing flexibility for customization and extension.

Custom agent development patterns emerge as applications become more specialized and complex. These patterns include hierarchical agent architectures, where high-level agents coordinate lower-level specialists, and emergent behavior systems, where complex behaviors arise from simple agent interactions. Tool integration and function calling capabilities allow agents to interact with external APIs, databases, and services, enabling them to perform real-world tasks and access current information.

Safety and Control: As AI agents become more autonomous and powerful, ensuring their safety and maintaining human oversight becomes increasingly critical. Agent safety mechanisms and constraints prevent agents from taking harmful actions or operating outside their intended scope. These mechanisms include action validation, goal alignment checks, and emergency shutdown procedures that can be triggered when agents behave unexpectedly.

Human oversight and intervention systems provide mechanisms for human operators to monitor agent behavior, override decisions when necessary, and provide guidance or corrections. Ethical decision-making frameworks ensure that agents make choices that align with human values and ethical principles, while risk assessment and mitigation strategies identify potential dangers and implement safeguards to prevent harm.
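Action validation and human oversight can be sketched as a gate in front of the agent's tool calls. Everything here is hypothetical: the tool names, the allowlist, and the approval flag stand in for whatever policy a real system enforces.

```python
def get_weather(city):
    return f"(stub) weather for {city}"

def delete_records(table):
    return f"(stub) deleted {table}"

TOOLS = {"get_weather": get_weather, "delete_records": delete_records}
ALLOWED = {"get_weather"}            # tools the agent may call on its own
NEEDS_APPROVAL = {"delete_records"}  # tools that require a human in the loop

def execute_action(action, approved_by_human=False):
    """Validate a proposed tool call before running it."""
    name, args = action["tool"], action.get("args", {})
    if name not in TOOLS:
        return {"status": "rejected", "reason": "unknown tool"}
    if name in NEEDS_APPROVAL and not approved_by_human:
        return {"status": "pending", "reason": "human approval required"}
    if name not in ALLOWED and name not in NEEDS_APPROVAL:
        return {"status": "rejected", "reason": "tool not permitted"}
    return {"status": "ok", "result": TOOLS[name](**args)}

print(execute_action({"tool": "get_weather", "args": {"city": "Oslo"}}))
print(execute_action({"tool": "delete_records", "args": {"table": "users"}}))
```

Keeping the policy outside the model means a misbehaving or manipulated agent still cannot run a destructive tool without explicit approval.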

4️⃣ Multimodal AI Systems

Multimodal AI systems that can process and understand text, images, audio, and video are becoming increasingly important. Advanced programmers need to understand how to build systems that can handle multiple data types seamlessly, enabling more natural and comprehensive AI interactions.

🔹 Data Processing

Input Processing: Multimodal AI systems must handle diverse data types, each requiring specialized preprocessing and feature extraction techniques. Image preprocessing involves normalization, augmentation, and feature extraction using convolutional neural networks or vision transformers. Audio signal processing includes noise reduction, feature extraction using spectrograms or mel-frequency cepstral coefficients, and temporal modeling to capture sequential patterns in audio data.

Video frame extraction and temporal modeling present unique challenges as they combine spatial and temporal dimensions. Systems must efficiently extract key frames, model temporal relationships between frames, and handle the computational complexity of processing video sequences. Cross-modal alignment and synchronization ensure that different data modalities are properly aligned in time and space, enabling the system to understand relationships between different types of information.

Model Integration: Vision-language models (VLMs) represent a breakthrough in multimodal AI, enabling systems to understand relationships between visual and textual information. These models can perform tasks like image captioning, visual question answering, and cross-modal retrieval. Audio-visual learning systems extend this capability to include audio data, enabling applications like lip reading, audio-visual speech recognition, and multimedia content analysis.

Cross-modal transformers and attention mechanisms allow models to focus on relevant parts of different modalities when making decisions. Multimodal fusion strategies combine information from multiple modalities to create comprehensive representations that capture the richness of multimodal data. These strategies range from simple concatenation to sophisticated attention-based fusion mechanisms that dynamically weight different modalities based on their relevance to the task.
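The difference between fusion strategies can be shown with a small sketch. It assumes each modality has already been projected to an embedding of the same dimension, and that a relevance score per modality is available (in a real model these scores would be learned).

```python
import math

def softmax(xs):
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]

def concat_fusion(embeddings):
    """Simplest strategy: concatenate the per-modality vectors."""
    return [value for vec in embeddings.values() for value in vec]

def attention_fusion(embeddings, relevance_scores):
    """Weighted sum of per-modality vectors; weights are softmax(relevance)."""
    names = list(embeddings)
    weights = softmax([relevance_scores[n] for n in names])
    dim = len(embeddings[names[0]])
    fused = [sum(w * embeddings[n][i] for w, n in zip(weights, names)) for i in range(dim)]
    return fused, dict(zip(names, weights))

embeddings = {"text": [1.0, 0.0], "image": [0.0, 1.0]}
fused, weights = attention_fusion(embeddings, {"text": 2.0, "image": 0.0})
print(concat_fusion(embeddings), fused, weights)
```

Concatenation preserves everything but grows with the number of modalities, while the weighted sum keeps a fixed size and lets the model emphasize whichever modality is most informative.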

🔹 Applications and Use Cases

Real-world Applications: Multimodal AI systems are transforming numerous industries and applications. Content generation and editing tools can create videos, images, and text based on multimodal inputs, enabling new forms of creative expression and content production. Autonomous vehicles and robotics rely heavily on multimodal perception to understand their environment, combining camera feeds, lidar data, radar information, and other sensors to make safe navigation decisions.

Healthcare diagnostics and analysis benefit from multimodal AI systems that can analyze medical images, patient records, and sensor data to provide comprehensive diagnostic insights. Creative AI and entertainment applications use multimodal systems to create immersive experiences, generate interactive content, and develop new forms of digital art and media.

Technical Challenges: Building effective multimodal AI systems presents several significant technical challenges. Computational efficiency and optimization are critical as processing multiple modalities simultaneously requires substantial computational resources. Data quality and annotation strategies become more complex when dealing with multiple data types, requiring sophisticated labeling approaches and quality assurance procedures.

Model interpretability and explainability are particularly important in multimodal systems, as understanding how different modalities contribute to decisions is crucial for building trust and ensuring reliability. Ethical considerations and bias mitigation become more complex when dealing with multiple data types, requiring careful attention to potential biases in each modality and their interactions.

5️⃣ AI Ethics and Responsible AI

As AI systems become more powerful and pervasive, understanding AI ethics and responsible AI practices is not just a moral imperative—it's a technical necessity. Advanced programmers must be able to identify, assess, and mitigate ethical risks in AI systems to ensure they benefit society while minimizing potential harms.

🔹 Ethical Frameworks

Core Principles: Responsible AI development is grounded in fundamental ethical principles that guide the design, implementation, and deployment of AI systems. Fairness, accountability, and transparency form the foundation of ethical AI, ensuring that systems treat all individuals equitably, can be held responsible for their decisions, and operate in ways that are understandable to users and stakeholders. Privacy protection and data governance ensure that personal information is handled responsibly and in accordance with legal and ethical standards.

Safety and security considerations address the potential risks that AI systems might pose to individuals, organizations, and society. This includes protecting against malicious use, ensuring system reliability, and preventing unintended consequences. Human oversight and control mechanisms ensure that humans remain ultimately responsible for AI system behavior and can intervene when necessary to prevent harm or correct errors.

Bias Detection and Mitigation: Algorithmic bias represents one of the most significant ethical challenges in AI development, as systems can inadvertently perpetuate or amplify existing societal biases. Bias identification involves systematic analysis of model behavior across different demographic groups, identifying patterns of unfair treatment or discrimination. Fairness metrics and evaluation frameworks provide quantitative measures of bias, enabling developers to assess and compare the fairness of different models and approaches.
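One widely used fairness metric is the demographic parity difference: the gap between the highest and lowest positive-prediction rates across groups. The sketch below computes it on invented predictions; group labels and data are purely illustrative.

```python
def selection_rates(predictions, groups):
    """Fraction of positive (1) predictions within each group."""
    totals, positives = {}, {}
    for p, g in zip(predictions, groups):
        totals[g] = totals.get(g, 0) + 1
        positives[g] = positives.get(g, 0) + (1 if p == 1 else 0)
    return {g: positives[g] / totals[g] for g in totals}

def demographic_parity_difference(predictions, groups):
    """Largest gap in selection rate between any two groups (0 means parity)."""
    rates = selection_rates(predictions, groups)
    return max(rates.values()) - min(rates.values())

preds = [1, 1, 1, 0, 1, 0, 0, 0]
groups = ["a", "a", "a", "a", "b", "b", "b", "b"]
print(selection_rates(preds, groups), demographic_parity_difference(preds, groups))
```

Demographic parity is only one definition of fairness; metrics such as equalized odds also condition on the true label and can disagree with it.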

Bias mitigation techniques range from data preprocessing approaches that balance training data to algorithmic modifications that explicitly optimize for fairness. Continuous monitoring and improvement processes ensure that bias detection and mitigation remain ongoing priorities throughout the AI system lifecycle, adapting to changing societal norms and emerging ethical concerns.

🔹 Implementation Practices

Technical Solutions: Implementing ethical AI requires technical solutions that embed ethical considerations into the AI development process. Explainable AI (XAI) techniques provide insights into how AI systems make decisions, enabling users to understand and trust system behavior. These techniques include model interpretability methods, feature importance analysis, and decision explanation generation that can communicate complex model reasoning in understandable terms.

Privacy-preserving machine learning techniques enable AI systems to learn from sensitive data while protecting individual privacy. Secure multi-party computation allows multiple parties to collaborate on AI model training without sharing raw data, while differential privacy provides mathematical guarantees about privacy protection. These techniques are essential for building AI systems that can operate on sensitive data while maintaining user trust and regulatory compliance.
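As a small illustration of differential privacy, the sketch below applies the Laplace mechanism to a counting query. A count has sensitivity 1 (adding or removing one person changes it by at most 1), so noise with scale 1/epsilon is added; the data and epsilon value are made up.

```python
import random

def private_count(values, predicate, epsilon, rng):
    """Epsilon-differentially-private count using the Laplace mechanism."""
    true_count = sum(1 for v in values if predicate(v))
    scale = 1.0 / epsilon  # sensitivity of a count is 1
    # The difference of two exponential samples is Laplace(0, scale) distributed.
    noise = rng.expovariate(1 / scale) - rng.expovariate(1 / scale)
    return true_count + noise

rng = random.Random(42)
ages = [23, 35, 41, 29, 52, 61, 38]
noisy = private_count(ages, lambda a: a >= 40, epsilon=1.0, rng=rng)
print(noisy)  # close to the true count of 3, but randomized
```

Smaller epsilon values give stronger privacy at the cost of noisier answers, and repeated queries consume the privacy budget.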

Governance and Compliance: Effective AI governance requires comprehensive frameworks that guide ethical decision-making throughout the AI development lifecycle. AI governance frameworks establish policies, procedures, and oversight mechanisms that ensure ethical considerations are integrated into all aspects of AI development and deployment. Regulatory compliance strategies ensure that AI systems meet legal requirements in different jurisdictions, adapting to evolving regulations and standards.

Audit trails and documentation provide transparency into AI system development and operation, enabling external review and accountability. Stakeholder engagement and communication ensure that diverse perspectives are considered in AI development, including input from affected communities, domain experts, and ethicists. This collaborative approach helps identify potential ethical issues early and ensures that AI systems serve the broader public interest.

6️⃣ Edge AI and IoT Integration

Edge AI brings intelligence closer to data sources, enabling real-time processing and decision-making. Advanced programmers need to understand how to deploy and optimize AI models for resource-constrained environments while maintaining performance and reliability.

🔹 Edge Computing

Model Optimization: Deploying AI models on edge devices requires sophisticated optimization techniques that balance performance with resource constraints. Model compression and quantization reduce model size and computational requirements while maintaining acceptable accuracy levels. Techniques like pruning remove unnecessary connections from neural networks, while quantization reduces precision of weights and activations, significantly reducing memory and computational requirements.
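Quantization is easy to see on a handful of weights. The following sketch uses symmetric per-tensor int8 quantization, one of several possible schemes; the weight values are invented.

```python
def quantize_int8(weights):
    """Symmetric per-tensor quantization of floats to integers in [-127, 127]."""
    max_abs = max(abs(w) for w in weights) or 1.0
    scale = max_abs / 127.0
    q = [max(-127, min(127, round(w / scale))) for w in weights]
    return q, scale

def dequantize(q, scale):
    """Map int8 values back to approximate floats."""
    return [qi * scale for qi in q]

weights = [0.02, -1.27, 0.5, 0.0, 1.0]
q, scale = quantize_int8(weights)
restored = dequantize(q, scale)
print(q, [round(r, 4) for r in restored])
```

Each weight now needs one byte instead of four, and the round-trip error per weight is bounded by half the scale.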

Neural architecture search (NAS) automates the design of efficient neural network architectures specifically optimized for edge deployment. Knowledge distillation techniques transfer knowledge from large, accurate models to smaller, more efficient models suitable for edge devices. Hardware-specific optimizations leverage the unique characteristics of edge hardware, including specialized accelerators, memory hierarchies, and power management features.
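Knowledge distillation typically trains the student to match the teacher's softened output distribution. The sketch below shows one common form of that loss, KL divergence on temperature-scaled softmax outputs multiplied by T²; the logits and temperature are illustrative.

```python
import math

def softmax(logits, temperature=1.0):
    scaled = [z / temperature for z in logits]
    m = max(scaled)
    exps = [math.exp(z - m) for z in scaled]
    total = sum(exps)
    return [e / total for e in exps]

def distillation_loss(student_logits, teacher_logits, temperature=2.0):
    """KL(teacher || student) on temperature-softened distributions, scaled by T^2."""
    p = softmax(teacher_logits, temperature)
    q = softmax(student_logits, temperature)
    kl = sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0)
    return kl * temperature ** 2

teacher = [4.0, 1.0, 0.5]
good_student = [3.5, 1.2, 0.4]
poor_student = [0.5, 1.0, 4.0]
print(distillation_loss(good_student, teacher), distillation_loss(poor_student, teacher))
```

In training, this term is usually combined with the ordinary cross-entropy loss on the ground-truth labels.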

Deployment Strategies: Effective edge AI deployment requires sophisticated orchestration and management strategies that handle the distributed nature of edge computing environments. Edge device management and orchestration systems coordinate the deployment, monitoring, and maintenance of AI models across potentially thousands of edge devices. These systems handle device heterogeneity, network connectivity issues, and dynamic resource constraints.

Distributed inference and federated learning enable collaborative AI processing across multiple edge devices, improving performance and enabling learning from distributed data sources. Edge-cloud collaboration patterns optimize the division of computational tasks between edge devices and cloud resources, balancing latency requirements with computational capabilities. Real-time data processing pipelines ensure that edge AI systems can handle streaming data efficiently, making decisions based on the most current information available.

🔹 IoT Integration

Sensor Data Processing: IoT devices generate vast amounts of sensor data that require sophisticated processing and analysis capabilities. Time-series analysis and forecasting techniques enable edge AI systems to identify patterns in sensor data and predict future values, supporting applications like predictive maintenance and demand forecasting. Anomaly detection systems identify unusual patterns or events in sensor data, enabling early warning of equipment failures or security threats.
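A lightweight anomaly detector suitable for an edge device can be built from a rolling window and a z-score. The window size, threshold, and temperature readings below are assumptions chosen for illustration.

```python
import statistics
from collections import deque

def detect_anomalies(readings, window=5, threshold=3.0):
    """Return indices whose value deviates from the rolling mean by more than threshold sigmas."""
    history = deque(maxlen=window)
    anomalies = []
    for i, value in enumerate(readings):
        if len(history) == window:
            mean = statistics.fmean(history)
            std = statistics.pstdev(history)
            if std > 0 and abs(value - mean) / std > threshold:
                anomalies.append(i)
                continue  # keep the outlier out of the baseline
        history.append(value)
    return anomalies

readings = [20.0, 20.1, 19.9, 20.2, 20.0, 20.1, 35.0, 20.0, 19.8]
print(detect_anomalies(readings))
```

The detector uses constant memory and needs no cloud connection, which matches the constraints of typical IoT hardware.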

Sensor fusion and data integration combine information from multiple sensors to create comprehensive understanding of the environment. Real-time decision-making systems process sensor data and make immediate decisions without requiring cloud connectivity, enabling autonomous operation in environments with limited or unreliable network access.

System Architecture: Building effective edge AI systems requires careful architectural design that addresses the unique challenges of distributed, resource-constrained environments. Edge gateway design and implementation provide the interface between edge devices and cloud systems, handling data preprocessing, local storage, and communication management. Data flow optimization and caching strategies minimize network traffic and improve system responsiveness by storing frequently accessed data locally.

Security and privacy protection are critical in edge AI systems, as they often process sensitive data in potentially insecure environments. Scalability and reliability considerations ensure that edge AI systems can handle increasing numbers of devices and maintain operation despite individual device failures or network issues.

7️⃣ Quantum Machine Learning

Quantum computing is poised to revolutionize AI by solving problems that are currently intractable for classical computers. Advanced programmers should understand the fundamentals of quantum machine learning and its potential applications, even as the field continues to evolve rapidly.

🔹 Quantum Fundamentals

Quantum Computing Basics: Quantum computing represents a fundamental shift in computational paradigms, leveraging quantum mechanical phenomena to process information in ways that classical computers cannot. Qubits, the quantum equivalent of classical bits, can exist in a superposition of 0 and 1, described by complex amplitudes that determine the probability of each measurement outcome. Quantum algorithms combine superposition with interference to amplify the amplitudes of correct answers, which yields large speedups for specific problems (such as integer factoring) rather than for computation in general.

Quantum gates and circuits manipulate qubits to perform computations, with quantum gates operating on superposition states to create complex quantum algorithms. Quantum entanglement and teleportation enable correlations between qubits that have no classical equivalent, providing new mechanisms for information processing and communication. Quantum algorithms and complexity theory provide frameworks for understanding the capabilities and limitations of quantum computing, helping developers identify problems that might benefit from quantum approaches.
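Superposition and entanglement can be simulated classically for a couple of qubits with plain state vectors. The sketch below applies a Hadamard gate to one qubit and then builds a Bell state with a CNOT; it is a toy simulator, not how frameworks such as Qiskit or Cirq are used in practice.

```python
import math

S = 1 / math.sqrt(2)
H = [[S, S], [S, -S]]                      # Hadamard gate
I = [[1, 0], [0, 1]]                       # identity
CNOT = [[1, 0, 0, 0], [0, 1, 0, 0],        # control = first qubit
        [0, 0, 0, 1], [0, 0, 1, 0]]

def apply_gate(gate, state):
    """Multiply a gate matrix by a state vector of amplitudes."""
    return [sum(g * a for g, a in zip(row, state)) for row in gate]

def kron(a, b):
    """Kronecker product, used to lift a one-qubit gate to two qubits."""
    return [[x * y for x in ra for y in rb] for ra in a for rb in b]

def probabilities(state):
    """Measurement probabilities are the squared magnitudes of the amplitudes."""
    return [abs(a) ** 2 for a in state]

plus = apply_gate(H, [1.0, 0.0])                     # equal superposition of 0 and 1
bell = apply_gate(CNOT, apply_gate(kron(H, I), [1.0, 0.0, 0.0, 0.0]))
print(probabilities(plus), probabilities(bell))
```

The Bell state only ever measures as 00 or 11, so the two qubits are perfectly correlated even though each one alone looks random.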

Quantum ML Algorithms: Quantum machine learning algorithms aim to use quantum computing capabilities to solve machine learning problems more efficiently than classical approaches, although whether they deliver a practical advantage on real-world data remains an open research question. Quantum neural networks use parameterized quantum circuits to implement neural network-like structures. Quantum support vector machines may solve classification problems more efficiently for certain types of data, while quantum clustering and classification algorithms are being explored for finding patterns in high-dimensional data.

Quantum optimization algorithms, such as the Quantum Approximate Optimization Algorithm (QAOA), can solve complex optimization problems that arise in machine learning, including feature selection, hyperparameter optimization, and model training. These algorithms represent the cutting edge of quantum machine learning research, with ongoing development of new approaches and applications.

🔹 Practical Applications

Current Capabilities: While quantum computing is still in its early stages, several application areas are showing promising results. Quantum chemistry and materials science benefit from quantum computers' natural ability to simulate quantum systems, enabling more accurate modeling of molecular interactions and material properties. Financial modeling and optimization can leverage quantum algorithms to solve complex portfolio optimization, risk assessment, and trading strategy problems.

Cryptography and security applications use quantum computing to develop new cryptographic protocols and to assess the security of existing systems against quantum attacks. Drug discovery and molecular simulation represent one of the most promising applications, as sufficiently large, error-corrected quantum computers could simulate molecular interactions more accurately than classical methods allow, potentially accelerating the development of new pharmaceuticals and materials.

Development Tools: The quantum computing ecosystem includes several development frameworks and tools that enable programmers to experiment with quantum machine learning. Qiskit, Cirq, and other quantum frameworks provide high-level abstractions for quantum programming, making it accessible to developers with classical computing backgrounds. Hybrid quantum-classical algorithms combine quantum and classical computing resources, enabling practical applications even with current quantum hardware limitations.

Quantum error correction and mitigation techniques address the inherent noise and decoherence in quantum systems, improving the reliability of quantum computations. Quantum advantage demonstration projects aim to identify specific problems where quantum computers can outperform classical systems, providing benchmarks for quantum computing progress and guiding future development efforts.

8️⃣ Federated Learning and Privacy

Federated learning enables collaborative model training without sharing raw data, addressing privacy concerns while leveraging distributed data sources. This skill is essential for building privacy-preserving AI systems that can learn from sensitive data while maintaining user privacy and regulatory compliance.

🔹 Federated Learning

Core Concepts: Federated learning represents a paradigm shift in machine learning, enabling model training across distributed data sources without requiring data centralization. Horizontal federated learning involves training on data with the same features but different samples, while vertical federated learning works with data that has the same samples but different features. This distinction is crucial for designing effective federated learning systems that can handle different data distribution scenarios.

Federated averaging and aggregation techniques combine model updates from multiple participants without sharing raw data. These techniques must handle the challenges of heterogeneous data distributions, varying computational capabilities, and network connectivity issues. Communication efficiency and compression strategies minimize the bandwidth required for federated learning, making it practical for deployment in resource-constrained environments.
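The aggregation step of federated averaging is a weighted mean of client parameters, with weights proportional to each client's number of training examples. The sketch below shows only that step, with flat parameter vectors and made-up client data.

```python
def federated_average(client_updates):
    """Weighted average of client weights.

    client_updates: list of (weights, num_examples) pairs, where weights is a
    flat list of floats with the same length for every client.
    """
    total = sum(n for _, n in client_updates)
    dim = len(client_updates[0][0])
    return [sum(w[i] * n for w, n in client_updates) / total for i in range(dim)]

updates = [
    ([1.0, 2.0], 10),  # client with 10 local examples
    ([3.0, 4.0], 30),  # client with 30 local examples
]
print(federated_average(updates))
```

Only model parameters leave each client; the raw training examples never do, although the updates themselves can still leak information without additional protections.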

Implementation Challenges: Building effective federated learning systems requires addressing several significant technical challenges. System heterogeneity and stragglers occur when participants have different computational capabilities or network conditions, potentially slowing down the overall training process. Privacy guarantees and security measures must ensure that model updates do not leak sensitive information about individual participants' data.

Model convergence and performance in federated settings can be challenging due to data heterogeneity and limited communication. Incentive mechanisms and coordination strategies encourage participation and ensure fair contribution from all participants, addressing the free-rider problem and ensuring sustainable federated learning ecosystems.

🔹 Privacy Technologies

Privacy-Preserving Techniques: Federated learning is complemented by a range of privacy-preserving techniques that provide additional layers of protection for sensitive data. Differential privacy implementation adds carefully calibrated noise to model updates or training data, providing mathematical guarantees about privacy protection. Homomorphic encryption enables computation on encrypted data, allowing model training without ever decrypting sensitive information.

Secure multi-party computation enables collaborative computation where no party learns anything beyond the final result, providing strong privacy guarantees for federated learning scenarios. Zero-knowledge proofs allow participants to prove that they have performed certain computations correctly without revealing the underlying data or intermediate results.
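Additive secret sharing is one of the simplest building blocks behind secure multi-party computation, and the sketch below uses it to compute a sum without any party seeing another party's input. The modulus, function names, and integer inputs are assumptions for illustration. The example uses a seeded `random.Random` for reproducibility, whereas a real protocol would need a cryptographically secure source of randomness and protection against dropouts and collusion.

```python
import random

# Hypothetical modulus; all arithmetic on shares is done modulo this value.
MODULUS = 2**31 - 1


def make_shares(secret, n_parties, rng):
    """Split secret into n random-looking shares that sum to it mod MODULUS."""
    shares = [rng.randrange(MODULUS) for _ in range(n_parties - 1)]
    last = (secret - sum(shares)) % MODULUS
    return shares + [last]


def secure_sum(secrets, rng):
    """Each party shares its secret; only per-party partial sums are revealed."""
    n = len(secrets)
    # all_shares[i][j] is the share that party i sends to party j.
    all_shares = [make_shares(s, n, rng) for s in secrets]
    # Party j adds up the shares it received and publishes that partial sum.
    partial_sums = [
        sum(all_shares[i][j] for i in range(n)) % MODULUS for j in range(n)
    ]
    return sum(partial_sums) % MODULUS


total = secure_sum([5, 11, 20], random.Random(0))
```

Model updates are floating-point vectors in practice, so they would first be quantized to integers and shared coordinate by coordinate.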

Practical Considerations: Implementing privacy-preserving federated learning requires careful consideration of practical constraints and trade-offs. Privacy-preserving techniques add computational and communication overhead, so optimization is needed to keep systems practical for real-world deployment. Regulatory compliance and auditing requirements vary across jurisdictions, so systems must adapt to different legal frameworks and produce the necessary documentation.

User consent and transparency mechanisms ensure that participants understand how their data is being used and can make informed decisions about participation. Risk assessment and mitigation strategies identify potential privacy risks and implement appropriate safeguards, including regular security audits and incident response procedures.

9️⃣ AI for Software Development

AI is transforming software development itself, from code generation to testing and deployment. Advanced programmers need to understand how to leverage AI to improve their development workflows and create better software more efficiently.

🔹 AI-Powered Development

Code Generation and Assistance: AI-powered code generation tools are revolutionizing how developers write, review, and maintain code. AI code completion and generation systems can suggest entire functions, classes, or modules based on context and requirements, significantly accelerating development speed. These tools learn from vast codebases to understand patterns, best practices, and common implementations, providing suggestions that align with established coding standards.

Code review and quality analysis tools use AI to automatically identify potential bugs, security vulnerabilities, and code quality issues. These systems can analyze code complexity, suggest refactoring opportunities, and ensure adherence to coding standards and best practices. Documentation generation and maintenance tools automatically create and update documentation based on code changes, ensuring that documentation remains current and comprehensive.

Testing and Quality Assurance: AI is transforming software testing by automating the generation of test cases and flagging potential issues before they reach production. Automated test case generation can produce test suites that cover edge cases and error conditions that manual testing might miss. Bug prediction systems analyze code patterns and historical data to identify areas likely to contain bugs, enabling proactive quality improvement.

Performance analysis and optimization tools use AI to identify performance bottlenecks and suggest improvements. Security vulnerability detection systems automatically scan code for common security issues, providing early warning of potential threats and suggesting remediation strategies.

🔹 Development Workflows

AI-Enhanced Tools: Modern development environments are increasingly incorporating AI capabilities that enhance developer productivity and code quality. GitHub Copilot and similar tools provide intelligent code suggestions based on context, comments, and existing code patterns. AI-powered IDEs and editors offer features like intelligent autocomplete, code refactoring suggestions, and automated error correction.

Automated deployment and CI/CD pipelines use AI to optimize deployment strategies, predict potential deployment issues, and automatically roll back problematic deployments. Monitoring and observability systems leverage AI to identify performance issues, predict system failures, and provide intelligent alerting that reduces false positives and helps developers focus on real problems.
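As a point of reference for the rollback and alerting ideas above, the sketch below uses a plain statistical baseline rather than a learned model: it flags a new release when its error rate sits far above recent history. The function names, the threshold of three standard deviations, and the sample error rates are all assumptions chosen for illustration.

```python
import statistics


def z_score(history, value):
    """Sample standard deviations between value and the mean of history."""
    mean = statistics.fmean(history)
    stdev = statistics.stdev(history)  # requires at least two data points
    if stdev == 0:
        if value == mean:
            return 0.0
        return float("inf") if value > mean else float("-inf")
    return (value - mean) / stdev


def should_roll_back(baseline_error_rates, canary_error_rate, z_threshold=3.0):
    """Roll back only when the new release's error rate is unusually high."""
    return z_score(baseline_error_rates, canary_error_rate) > z_threshold


# Hypothetical error rates from the last five stable deployments.
BASELINE = [0.010, 0.012, 0.011, 0.009, 0.010]

rollback_needed = should_roll_back(BASELINE, 0.050)
rollback_not_needed = should_roll_back(BASELINE, 0.011)
```

Checking only for increases means an unusually low error rate never triggers a rollback. A production system would likely add minimum traffic volumes and multiple signals before acting.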

Best Practices: Successfully integrating AI into software development requires careful consideration of best practices and potential pitfalls. Human-AI collaboration patterns ensure that AI tools enhance rather than replace human judgment, maintaining the creativity and problem-solving abilities that humans bring to software development. Code quality and maintainability remain paramount, with AI tools serving to enhance rather than compromise these essential characteristics.

Security and privacy considerations are particularly important when using AI tools that may have access to sensitive code or data. Continuous learning and improvement processes ensure that AI tools evolve with changing development practices and technology trends, maintaining their relevance and effectiveness over time.

🔟 AI Strategy and Business Impact

Understanding how AI creates business value and drives strategic decisions is crucial for advanced programmers. This skill bridges the gap between technical implementation and business outcomes, enabling developers to create AI solutions that deliver measurable business impact.

🔹 AI Strategy Development

Business Alignment: Effective AI strategy begins with a deep understanding of business objectives and the identification of opportunities where AI can create significant value. AI opportunity identification and prioritization involve systematic analysis of business processes, customer needs, and the competitive landscape to find high-impact AI applications. This process requires collaboration between technical teams and business stakeholders to ensure that AI initiatives align with strategic objectives.

ROI analysis and business case development provide the foundation for AI investment decisions by quantifying the expected benefits and costs of each initiative. Risk assessment and mitigation strategies identify potential challenges and define contingency plans, so that AI projects proceed with known, managed risks. Stakeholder alignment and communication ensure that all relevant parties understand the AI strategy and their roles in its implementation.
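The arithmetic behind a basic business case can be sketched in a few lines. The example uses the standard definitions of return on investment (net benefit divided by cost) and simple payback period. The figures are hypothetical, and a real analysis would also discount future cash flows and account for uncertainty.

```python
def roi(total_benefit, total_cost):
    """Return on investment as a fraction: (benefit - cost) / cost."""
    if total_cost <= 0:
        raise ValueError("total_cost must be positive")
    return (total_benefit - total_cost) / total_cost


def payback_period_months(upfront_cost, monthly_net_benefit):
    """Months needed to recover the upfront cost, or None if it never pays back."""
    if monthly_net_benefit <= 0:
        return None
    return upfront_cost / monthly_net_benefit


# Hypothetical project: 100,000 in total cost, 150,000 in total benefit.
project_roi = roi(150_000, 100_000)

# Hypothetical project: 120,000 upfront, 10,000 net benefit per month.
payback = payback_period_months(120_000, 10_000)
```

An ROI of 0.5 here means the project returns its cost plus a further 50 percent, and the second project recovers its upfront cost after 12 months.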

Implementation Planning: Successful AI strategy execution requires comprehensive planning that addresses technical, organizational, and operational considerations. Technology roadmap and architecture planning ensure that AI systems can scale with business growth and integrate with existing infrastructure. Team building and skill development create the human capital necessary for AI success, including both technical expertise and business acumen.

Change management and adoption strategies address the organizational challenges of AI implementation, ensuring that new AI systems are embraced by users and integrated into existing workflows. Performance measurement and optimization frameworks provide ongoing feedback on AI system effectiveness and guide continuous improvement efforts.

🔹 Value Creation

Business Applications: AI creates value across diverse business applications, from customer-facing systems to internal operations. Customer experience and personalization use AI to create more engaging, relevant, and effective customer interactions, driving increased satisfaction and loyalty. Operational efficiency and automation streamline business processes, reducing costs and improving productivity while enabling human workers to focus on higher-value activities.

Product innovation and development leverage AI to accelerate research and development, identify new product opportunities, and optimize product design and manufacturing processes. Competitive advantage and differentiation arise from AI capabilities that are difficult for competitors to replicate, creating sustainable competitive moats in increasingly AI-driven markets.

Success Metrics: Measuring AI success requires comprehensive metrics that capture both technical performance and business impact. Key performance indicators (KPIs) track specific aspects of AI system performance, from accuracy and speed to user satisfaction and business outcomes. Business impact measurement connects AI performance to tangible business results, including revenue growth, cost reduction, and customer satisfaction improvements.
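One simple way to connect a technical metric to a business outcome is to price each kind of model error. The sketch below computes precision and recall for a binary classifier and then converts false positives and false negatives into a total cost. The labels, predictions, and per-error costs are hypothetical.

```python
def confusion_counts(y_true, y_pred):
    """Return (tp, fp, fn, tn) for binary labels where 1 is the positive class."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == 1 and p == 1)
    fp = sum(1 for t, p in pairs if t == 0 and p == 1)
    fn = sum(1 for t, p in pairs if t == 1 and p == 0)
    tn = sum(1 for t, p in pairs if t == 0 and p == 0)
    return tp, fp, fn, tn


def precision_recall(tp, fp, fn):
    """Technical KPIs; each is 0.0 when its denominator would be zero."""
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    return precision, recall


def error_cost(fp, fn, cost_per_fp, cost_per_fn):
    """Business KPI: total cost of the model's mistakes."""
    return fp * cost_per_fp + fn * cost_per_fn


y_true = [1, 1, 0, 0, 1, 0]
y_pred = [1, 0, 0, 1, 1, 0]

tp, fp, fn, tn = confusion_counts(y_true, y_pred)
precision, recall = precision_recall(tp, fp, fn)

# Assume a missed positive costs ten times as much as a false alarm.
total_cost = error_cost(fp, fn, cost_per_fp=5, cost_per_fn=50)
```

Two models with the same accuracy can have very different total costs once errors are priced this way, which is why the business metric is worth tracking alongside the technical one.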

Continuous improvement and optimization processes ensure that AI systems evolve with changing business needs and technological capabilities. Long-term value creation strategies focus on building AI capabilities that provide sustained competitive advantage and business growth over extended periods.

🎯 Learning Roadmap for 2025

To master these 10 AI skills effectively, follow this structured learning roadmap designed for advanced programmers:

🔹 Phase 1: Foundation (Months 1-3)

1. Core Skills

- Deep dive into LLMs and transformer architecture

- MLOps fundamentals and infrastructure setup

- AI ethics and responsible AI practices

- Basic quantum computing concepts

2. Practical Projects

- Build a custom LLM application

- Set up a complete MLOps pipeline

- Implement bias detection in ML models

- Create a simple quantum algorithm

🔹 Phase 2: Advanced Implementation (Months 4-6)

1. Specialized Skills

- AI agents and autonomous systems

- Multimodal AI and edge computing

- Federated learning and privacy-preserving AI

- AI-powered software development tools

2. Real-world Applications

- Deploy AI agents in production environments

- Build multimodal applications

- Implement federated learning systems

- Integrate AI into development workflows

🔹 Phase 3: Mastery and Innovation (Months 7-12)

1. Advanced Topics

- Quantum machine learning applications

- Cutting-edge research and emerging trends

- AI strategy and business impact

- Innovation and thought leadership

2. Leadership and Impact

- Lead AI initiatives and teams

- Contribute to open-source AI projects

- Share knowledge through teaching and writing

- Drive AI strategy and innovation

🚀 Resources and Tools

To accelerate your learning journey, here are the essential resources and tools for each skill area:

🔹 Learning Resources

1. Online Courses and Platforms

- Coursera: Deep Learning Specialization, AI for Everyone

- edX: MIT Introduction to Deep Learning

- Fast.ai: Practical Deep Learning for Coders

- Stanford CS224N: Natural Language Processing

2. Books and Publications

- "Attention Is All You Need" - Transformer paper

- "Hands-On Machine Learning" - Aurélien Géron

- "Designing Data-Intensive Applications" - Martin Kleppmann

- "AI Superpowers" - Kai-Fu Lee

🔹 Development Tools

1. Frameworks and Libraries

- PyTorch, TensorFlow, and JAX for deep learning

- Hugging Face Transformers for LLMs

- MLflow and Weights & Biases for MLOps

- Qiskit and Cirq for quantum computing

2. Platforms and Services

- AWS SageMaker and Azure ML for cloud AI

- Google Cloud Vertex AI (the successor to AI Platform)

- Databricks for data engineering and ML

- NVIDIA NGC for GPU-optimized containers

💡 Conclusion: The Future of AI Programming

The AI landscape in 2025 presents both unprecedented opportunities and significant challenges for advanced programmers. The 10 skills outlined in this guide form a comprehensive foundation for navigating this rapidly evolving field.

Success in AI programming requires more than technical expertise—it demands a holistic understanding of how AI systems interact with society, business, and technology. By mastering these skills, you'll be well-positioned to lead innovation, drive business value, and shape the future of AI.

🎯 Key Success Factors:

• Continuous learning and adaptation to new technologies

• Strong foundation in both theory and practical implementation

• Understanding of ethical implications and responsible AI practices

• Ability to bridge technical and business perspectives

• Collaboration and knowledge sharing within the AI community

Remember that AI is not just about building models—it's about creating systems that solve real problems, create value, and improve human lives. As you develop these skills, focus on applications that make a meaningful impact and contribute to the responsible development of AI technology.

The future belongs to those who can harness the power of AI while understanding its limitations and implications. Start your journey today, and you'll be at the forefront of the AI revolution in 2025 and beyond.