Comprehensive Guide to Kafka Production Support

Introduction: Navigating the World of Kafka Production Support

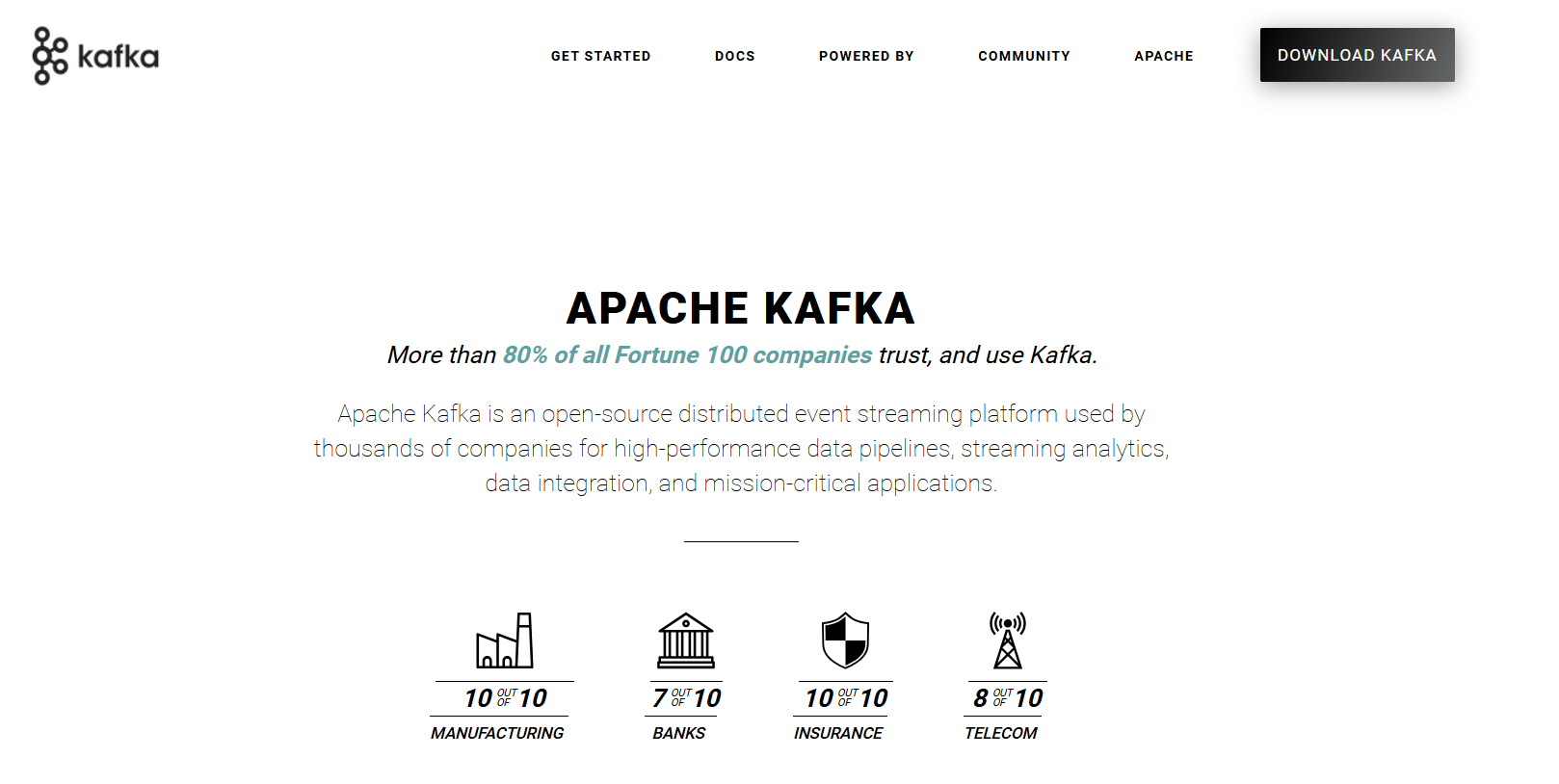

Apache Kafka has emerged as a cornerstone technology in modern data architectures, functioning as a high-throughput, distributed streaming platform. It enables organizations to build real-time data pipelines and streaming applications capable of handling trillions of events per day. Its critical role in processing vast amounts of data for analytics, event-driven microservices, and log aggregation makes its stability and performance paramount.

This guide aims to provide a comprehensive overview of Kafka production support, meticulously covering the technical, operational, and process-related aspects essential for maintaining a healthy and efficient Kafka ecosystem. Whether you are a beginner Kafka administrator taking your first steps, an experienced DevOps professional looking to deepen your expertise, or part of a Platform/SRE team responsible for large-scale deployments, this document will equip you with the knowledge and best practices to navigate the complexities of Kafka in production.

We will delve into key areas including understanding your Kafka environment, cluster management and operations, robust monitoring and alerting strategies, performance tuning techniques, troubleshooting common issues, ensuring high availability and disaster recovery, supporting ecosystem components like Kafka Connect and Kafka Streams, considerations for running Kafka on Kubernetes, and establishing effective support processes.

Robust production support is not merely about fixing problems as they arise; it's about proactively managing the environment to ensure Kafka's reliability, optimal performance, and continuous availability. This proactive stance is crucial for businesses that depend on Kafka for their critical data flows and real-time decision-making capabilities.

Understanding Your Kafka Environment: The Foundation for Effective Support

A solid grasp of Kafka's architecture and its components is fundamental for effective production support. While a deep dive into every nuance of Kafka is beyond this section's scope, we will focus on the concepts essential for diagnosing and maintaining a production system.

Core Kafka Architecture Overview

Apache Kafka is inherently a distributed system. This means it's designed to run as a cluster of one or more servers (called brokers) that can span multiple data centers or cloud regions. This distributed nature provides scalability, fault tolerance, and high availability. Data in Kafka is organized into topics, which are further divided into partitions. Partitions allow for parallel processing and data distribution across brokers.

Key Components Deep Dive (from a support perspective)

Brokers

Brokers are the heart of a Kafka cluster. Each broker is a server that stores data in partitions, handles client requests (produce and fetch), and participates in data replication. A crucial role within the broker cluster is that of the controller. One broker is elected as the controller and is responsible for managing the state of partitions and replicas and for performing administrative tasks like reassigning partitions. For troubleshooting, broker logs are invaluable. Specifically, the controller.log provides insights into controller activities and leadership changes, while the state-change.log records state transitions of resources like topics and partitions, which is useful for diagnosing issues like offline partitions (Confluent Platform Docs: Post-Deployment).

ZooKeeper (Legacy) / KRaft (Modern)

ZooKeeper: In traditional Kafka deployments (versions prior to widespread KRaft adoption), Apache ZooKeeper played a critical role. It was responsible for storing cluster metadata (like broker configuration, topic configurations, ACLs), electing the controller broker, and coordinating brokers (e.g., notifying brokers of changes in the cluster). Common ZooKeeper issues in a Kafka context include connectivity problems between brokers and ZooKeeper, performance bottlenecks in the ZooKeeper ensemble, and session expirations, all of which can destabilize the Kafka cluster.

KRaft (Kafka Raft Metadata mode): KRaft is Kafka's built-in consensus protocol designed to replace ZooKeeper, simplifying Kafka's architecture and improving scalability and operational manageability. In KRaft mode, a subset of brokers, designated as controllers, manage Kafka's metadata using a Raft-based consensus algorithm. This eliminates the external dependency on ZooKeeper. Key configurations for KRaft mode include process.roles (defining if a node is a broker, controller, or both – though "both" is not recommended for production), node.id (a unique identifier for each node), and controller.quorum.voters (listing the controller nodes participating in the metadata quorum) (Confluent KRaft Docs: Configuration). Running Kafka in KRaft mode is a best practice for new deployments (Instaclustr: Kafka Best Practices).
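As a rough illustration of the controller.quorum.voters setting described above, the following Python sketch parses a voter list of the form id@host:port and derives the majority size that a Raft quorum needs. The helper name parse_quorum_voters and the host names are hypothetical, and the sketch assumes Python 3.9 or later.

```python
from typing import NamedTuple


class Voter(NamedTuple):
    node_id: int
    host: str
    port: int


def parse_quorum_voters(value: str) -> list[Voter]:
    """Parse a controller.quorum.voters string into Voter tuples."""
    voters = []
    for entry in value.split(","):
        node_id, _, endpoint = entry.strip().partition("@")
        host, _, port = endpoint.rpartition(":")
        if not (node_id.isdigit() and host and port.isdigit()):
            raise ValueError(f"malformed voter entry: {entry!r}")
        voters.append(Voter(int(node_id), host, int(port)))
    ids = [v.node_id for v in voters]
    if len(ids) != len(set(ids)):
        raise ValueError("duplicate node.id in controller.quorum.voters")
    return voters


# Hypothetical three-controller quorum (host names are made up).
voters = parse_quorum_voters(
    "1@controller1:9093,2@controller2:9093,3@controller3:9093"
)
# A Raft quorum stays available while a majority of voters is reachable.
majority = len(voters) // 2 + 1
```

With three controllers the majority is two, so the metadata quorum in this example can tolerate the loss of one controller.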

Topics and Partitions

Topics are logical channels or categories to which records are published. Each topic is divided into one or more partitions. Partitions are the fundamental unit of parallelism in Kafka; data within a partition is ordered and immutable. Each message in a partition is assigned a sequential ID number called an offset. Partitions allow a topic's data to be distributed across multiple brokers, enabling horizontal scaling for both storage and processing. The number of partitions directly impacts consumer parallelism, as a consumer group can have at most as many active consumers as there are partitions for a topic.

Producers

Producers are client applications that write (publish) streams of records to Kafka topics. From a support perspective, key producer configurations significantly affect reliability. The acks (acknowledgments) setting controls the number of brokers that must acknowledge receipt of a message before the producer considers the write successful (e.g., acks=all provides the strongest guarantee). The retries setting determines how many times a producer will attempt to resend a message upon a transient failure (Dev.to: Mitigating Message Loss).

Consumers & Consumer Groups

Consumers are client applications that read (subscribe to) streams of records from Kafka topics. Consumers typically operate as part of a consumer group. Each consumer in a group reads messages from exclusive partitions of a topic. Kafka ensures that each partition is consumed by exactly one consumer within a group at any given time. Offset management is crucial: consumers commit the offsets of messages they have processed, allowing them to resume from where they left off in case of failure or restart. Rebalancing is the process by which partitions are reassigned among consumers in a group when a new consumer joins or an existing one leaves or fails.
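The relationship between partitions, group members, and rebalancing can be sketched with a simplified model. The function below is an illustrative round-robin assignment, not Kafka's actual assignor implementation; the names assign_round_robin, c1, c2, and c3 are hypothetical.

```python
def assign_round_robin(partitions: int, consumers: list[str]) -> dict[str, list[int]]:
    """Spread partition ids over consumers so each partition has one owner."""
    assignment = {name: [] for name in consumers}
    for partition in range(partitions):
        owner = consumers[partition % len(consumers)]
        assignment[owner].append(partition)
    return assignment


# Six partitions shared by three consumers in one group.
before = assign_round_robin(6, ["c1", "c2", "c3"])
# c3 fails; a rebalance redistributes its partitions to the survivors.
after = assign_round_robin(6, ["c1", "c2"])
# More consumers than partitions: the surplus consumer owns nothing.
surplus = assign_round_robin(2, ["c1", "c2", "c3"])
idle = [name for name, owned in surplus.items() if not owned]
```

In this model every partition has exactly one owner within the group, and any consumer beyond the partition count sits idle, which is why adding consumers past that point does not increase throughput.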

Data Flow

At a high level, the data flow in Kafka is as follows:

- Producers send records (key-value pairs) to specific topics. The producer can choose a specific partition or let Kafka decide based on the message key or a round-robin strategy.

- The Kafka broker that is the leader for the target partition receives the record, writes it to its local log, and replicates it to follower brokers (depending on the topic's replication factor and the producer's acks setting).

- Once the write is acknowledged (based on the acks configuration), the producer considers the message sent.

- Consumers subscribe to one or more topics. Kafka delivers messages from the partitions of those topics to the consumers.

- Consumers process the messages and periodically commit the offsets of the messages they have successfully processed back to Kafka.
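The partition-selection step at the start of this flow can be sketched as follows. This is a simplified model: CRC32 stands in for the murmur2 hash used by Kafka's Java client, and recent clients group unkeyed records "stickily" per batch rather than strictly round-robin. The function name choose_partition is hypothetical.

```python
import itertools
import zlib
from typing import Optional

_next_unkeyed = itertools.count()


def choose_partition(key: Optional[bytes], num_partitions: int) -> int:
    """Pick a partition: hash of the key if present, else round-robin."""
    if key is None:
        return next(_next_unkeyed) % num_partitions
    # CRC32 is a stand-in; Kafka's Java client uses murmur2 for keyed records.
    return zlib.crc32(key) % num_partitions


# The same key always maps to the same partition, preserving per-key order.
first = choose_partition(b"order-42", 6)
second = choose_partition(b"order-42", 6)
# Records without a key cycle through the partitions: 0, 1, 2, 0.
unkeyed = [choose_partition(None, 3) for _ in range(4)]
```

Because the partition is derived from the key modulo the partition count, changing the number of partitions changes where existing keys land, which is worth remembering before expanding a keyed topic.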

Key Takeaways: Understanding Your Kafka Environment

- Kafka is a distributed system with brokers as core servers.

- Brokers store data, handle requests, and one acts as a controller. Logs like controller.log and state-change.log are vital for troubleshooting.

- KRaft mode is replacing ZooKeeper for metadata management, simplifying architecture. Key KRaft configs include process.roles and controller.quorum.voters.

- Topics are divided into partitions, enabling parallelism and data distribution.

- Producers write data; acks and retries settings are critical for reliability.

- Consumers read data in groups, manage offsets, and undergo rebalancing.

Kafka Cluster Management and Operations

Effective Kafka production support hinges on robust cluster management and operational practices. This section covers the day-to-day and strategic activities required to maintain a healthy, performant, and scalable Kafka cluster.

Hardware and OS Considerations for Production

Choosing appropriate hardware and tuning the operating system are foundational for a stable Kafka deployment.

- CPU: Modern multi-core processors are recommended. Kafka can utilize multiple cores for network threads, I/O threads, and stream processing.

- RAM: Sufficient RAM is crucial. Kafka uses RAM for JVM heap and heavily relies on the OS page cache for performance. A common recommendation is to allocate around 6 GB of RAM for heap space for brokers, but for heavy production loads, machines with 32 GB or more are preferable (Inder-DevOps Medium: Kafka Best Practices). The remaining RAM should be available for the OS page cache.

- Disk: Solid State Drives (SSDs) are highly recommended for their low latency and high throughput, which significantly benefit Kafka's disk-intensive operations. Using multiple drives can maximize throughput. It's critical not to share the same drives used for Kafka data with application logs or other OS filesystem activity to avoid contention (Confluent Docs: Running Kafka in Production).

- Network: A high-bandwidth, low-latency network (10GbE or higher) is essential, especially for clusters with high throughput or many replicas.

- OS Tuning:

- File Descriptors: Kafka brokers can open a large number of files (for log segments and network connections). The operating system's limit on open file descriptors (ulimit -n) must be raised significantly. A common issue leading to outages is running out of file descriptors (Instaclustr: 12 Kafka Best Practices).

- Swappiness: Reduce swappiness (e.g., set vm.swappiness=1) to prevent the OS from swapping out Kafka process memory, which can severely degrade performance.

- Filesystem: XFS or ext4 are commonly used filesystems.
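A pre-flight check for the file descriptor limit might look like the sketch below. It uses Python's standard resource module (Unix only) and inspects the limit of the process running the script, so it is only meaningful when run as the same user and under the same service settings as the broker. The function name nofile_limit_ok and the target of 100,000 are illustrative assumptions, not values from the Kafka documentation.

```python
import resource


def nofile_limit_ok(required: int) -> bool:
    """Return True if the soft open-file limit is at least `required`."""
    soft, _hard = resource.getrlimit(resource.RLIMIT_NOFILE)
    return soft == resource.RLIM_INFINITY or soft >= required


# 100_000 is an illustrative target, not a value taken from the Kafka docs.
if not nofile_limit_ok(100_000):
    print("open-file limit is below target; raise it before starting the broker")
```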

Configuration Management

Proper configuration is key to Kafka's stability and performance.

- Broker Configurations (server.properties): This file contains numerous settings for brokers. Some configurations are static (require a broker restart to take effect), while others can be updated dynamically without a restart. Tools and APIs provided by Kafka (and Confluent Platform) allow for dynamic updates of certain broker configurations (Confluent Docs: Dynamic Broker Configuration). Key settings include listener configurations, log directories, thread pool sizes, and replication parameters.

- Topic Configurations:

Topics can have their own specific configurations that override broker defaults.

- Replication Factor: Controls how many copies of each message are stored. A common production setting is a replication factor of 3, allowing for the failure of two brokers without data loss (Apache Kafka Documentation). Instaclustr also recommends a replication factor greater than 2 (typically 3) (Instaclustr: 12 Kafka Best Practices).

- Partition Count: Determines the level of parallelism. The number of partitions impacts throughput and consumer scaling.

- Retention Policies (retention.ms, retention.bytes): Define how long messages are kept (retention.ms) or how much data a partition may hold (retention.bytes) before old log segments are deleted.

- Cleanup Policies (cleanup.policy): Can be delete (default, messages are deleted after retention period) or compact (keeps at least the latest value for each message key, useful for changelogs).

- ZooKeeper/KRaft Configuration:

For ZooKeeper-based clusters, ensure the ZooKeeper ensemble is correctly configured for quorum and performance. For KRaft, critical settings include process.roles, node.id, controller.quorum.voters, and listener configurations for the metadata quorum.
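Replication factor and retention together drive disk requirements. The following back-of-envelope sketch multiplies write throughput by the retention window and the replication factor. It deliberately ignores compression, index files, and free-space headroom, and the workload figures and the function name required_disk_bytes are hypothetical.

```python
def required_disk_bytes(write_bytes_per_sec: float,
                        retention_ms: int,
                        replication_factor: int) -> float:
    """Cluster-wide bytes needed to hold one retention window of data."""
    retention_sec = retention_ms / 1000
    return write_bytes_per_sec * retention_sec * replication_factor


# Hypothetical workload: 10 MiB/s of producer traffic kept for 7 days, RF 3.
seven_days_ms = 7 * 24 * 60 * 60 * 1000
total = required_disk_bytes(10 * 1024**2, seven_days_ms, 3)
total_tib = total / 1024**4
```

For this example the estimate comes to roughly 17.3 TiB across the cluster, before any safety margin is added.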

Routine Maintenance Tasks

- Log Management (Kafka's own logs):

Kafka brokers produce several important logs:

- server.log: Main broker operational log.

- controller.log: Logs activities of the elected controller broker.

- state-change.log: Records all state changes managed by the controller.

The default log level is INFO. For debugging, it can be dynamically changed to DEBUG, though this should be done cautiously in production due to increased log volume (Confluent Docs: Post-Deployment). Implement log rotation and ensure sufficient disk space for these logs.

- Disk Space Management: Continuously monitor disk usage for data logs and application logs. Proactively manage disk space by adjusting retention policies or adding more storage.

- Regular Health Checks: Implement automated scripts or perform manual checks to verify cluster status, broker health, ISR counts, and consumer lag.

- Security Patch Management: Regularly apply security patches to the underlying OS and Kafka components to mitigate vulnerabilities.

Upgrades and Patching

Upgrading a Kafka cluster requires careful planning and execution.

- Planning for Upgrades:

- Check compatibility between broker versions, client versions, and the inter-broker protocol version (inter.broker.protocol.version).

- Review release notes for any breaking changes or specific upgrade instructions.

- Rolling Restart Procedures: To maintain availability during upgrades or configuration changes that require restarts, perform a rolling restart. This involves restarting one broker at a time, allowing the cluster to remain operational. Ensure partitions have leaders on other brokers before restarting one (Confluent Docs: Post-Deployment, Aiven Docs: Upgrade Procedure).

- Handling Configuration Changes During Upgrades: Some configuration changes might be necessary as part of an upgrade. Apply these systematically during the rolling restart.

- Rollback Strategies: True rollback of a Kafka version upgrade can be complex and risky. The primary strategy is to ensure a smooth forward upgrade. If issues occur, it's often about fixing forward or restoring from backups (if a DR scenario is triggered). Some platforms might offer limited rollback capabilities for plan changes rather than version upgrades (Aiven Docs: Rollback Options).
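The pre-restart check mentioned under rolling restarts can be sketched as a small function that asks whether every partition hosted on a broker would still have enough in-sync replicas if that broker went away. The data layout, the function name safe_to_restart, and the minimum of two in-sync replicas are assumptions for illustration; in practice the state would come from topic metadata.

```python
def safe_to_restart(broker_id: int,
                    partitions: list[dict],
                    min_insync_replicas: int = 2) -> bool:
    """True if every partition keeps enough in-sync replicas without broker_id."""
    for p in partitions:
        remaining_isr = [b for b in p["isr"] if b != broker_id]
        if broker_id in p["replicas"] and len(remaining_isr) < min_insync_replicas:
            return False
    return True


# Hypothetical cluster state: broker 1 has fallen out of the ISR for partition 1.
state = [
    {"topic": "orders", "partition": 0, "replicas": [1, 2, 3], "isr": [1, 2, 3]},
    {"topic": "orders", "partition": 1, "replicas": [2, 3, 1], "isr": [2, 3]},
]
restart_1 = safe_to_restart(1, state)  # broker 1 is not relied on by any ISR
restart_2 = safe_to_restart(2, state)  # partition 1 would be left with one ISR member
```

A rolling restart script could loop over brokers and wait until a check like this passes before moving on to the next one.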

Scaling the Cluster

Adding/Removing Brokers

- Graceful Addition of New Brokers: When adding a new broker, it initially has no data. Partitions need to be reassigned to it to balance the load.

- Graceful Removal of Brokers: This is a more involved process. Data from the broker(s) being removed must be migrated to other brokers in the cluster. This involves partition reassignment. Tools like kafka-remove-brokers (part of Confluent Platform) or features like Self-Balancing Clusters can automate much of this process (Confluent Docs: Self-Balancing Clusters). AWS MSK also provides procedures for safe broker removal (AWS MSK Blog: Safely Remove Brokers). The broker should be shut down gracefully (controlled.shutdown.enable=true).

- Impact on Cluster Balance and Performance: Adding or removing brokers temporarily impacts performance due to data movement. The goal is to achieve a balanced cluster afterward.

Partition Reassignment

- Scenarios: Required when adding brokers, to balance load across existing brokers, moving data off a failing disk, or changing replication factors.

- Tool: The kafka-reassign-partitions.sh script is used to generate a reassignment plan and execute it.

- Best Practices:

- Perform reassignments during off-peak hours if possible.

- Throttle the reassignment bandwidth (--throttle option) to minimize impact on production traffic.

- Monitor the progress using the --verify option.

- Rebalancing Triggers and Effects: Consumer group rebalancing is different from partition reassignment (which is about data placement on brokers). Consumer rebalancing is triggered by consumers joining/leaving a group or by session timeouts. It can temporarily halt message consumption for the affected group (Redpanda: Kafka Rebalancing, meshIQ: Handling Rebalancing Issues).
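A reassignment plan is a JSON document listing the desired replica set for each partition. The sketch below builds such a plan by hand, in the format accepted by kafka-reassign-partitions.sh, using a simple round-robin placement. The topic name, broker ids, and the function name build_reassignment are hypothetical, and a real plan should also account for rack awareness and current load.

```python
import json


def build_reassignment(topic: str, partitions: int,
                       brokers: list[int], replication_factor: int) -> dict:
    """Spread replicas round-robin over brokers, one entry per partition."""
    if replication_factor > len(brokers):
        raise ValueError("replication factor exceeds broker count")
    entries = []
    for p in range(partitions):
        replicas = [brokers[(p + i) % len(brokers)]
                    for i in range(replication_factor)]
        entries.append({"topic": topic, "partition": p, "replicas": replicas})
    return {"version": 1, "partitions": entries}


# Hypothetical topic and broker ids; broker 4 is the newly added one.
plan = build_reassignment("orders", 4, [1, 2, 3, 4], 3)
print(json.dumps(plan, indent=2))
```

The first broker in each replicas list is the preferred leader, so rotating the starting broker per partition, as done here, also spreads leadership across the cluster.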

Security Best Practices for Production Kafka

Securing a Kafka cluster is paramount to protect sensitive data.

- Authentication: Verify the identity of clients and brokers.

- Client-Broker & Broker-Broker: SASL (Simple Authentication and Security Layer) is a common framework. Supported mechanisms include PLAIN, SCRAM (Salted Challenge Response Authentication Mechanism), GSSAPI (Kerberos), and OAUTHBEARER. Mutual TLS (mTLS) can also be used for authentication. (Confluent Blog: Secure Kafka Deployment).

- ZooKeeper/KRaft Communication: Communication between brokers and ZooKeeper (or between KRaft controllers/brokers) should also be authenticated (e.g., SASL or mTLS for ZooKeeper, mTLS for KRaft).

- Authorization: Control what authenticated principals can do.

- ACLs (Access Control Lists): Kafka provides native ACLs managed via the kafka-acls.sh script. ACLs define permissions (Read, Write, Create, etc.) on resources (Topics, Groups, Cluster).

- Role-Based Access Control (RBAC): Confluent Platform offers RBAC, which simplifies managing permissions for large numbers of users and resources by assigning roles. (Confluent Blog: Secure Kafka Deployment).

- Encryption: Protect data in transit.

- SSL/TLS should be configured for all communication paths: client-broker, inter-broker, broker-ZooKeeper/KRaft, and for tools. This encrypts messages and authentication credentials on the network.

- Network Security:

- Use firewalls to restrict access to Kafka brokers and ZooKeeper/KRaft nodes to only necessary clients and servers.

- Employ network segmentation to isolate the Kafka cluster.

- Secure Kafka listeners by configuring them appropriately (e.g., using separate listeners for internal and external traffic with different security protocols).

- Securing ZooKeeper/KRaft Metadata: Ensure that access to ZooKeeper data or KRaft metadata logs is restricted and authenticated/authorized, as this metadata is critical to cluster operation.

- Regular Security Audits and Updates: Periodically review security configurations, audit access logs, and apply security updates to Kafka and its dependencies.

Key Takeaways: Cluster Management and Operations

- Hardware/OS: Use SSDs, ample RAM (for heap and page cache), sufficient CPU, and tune OS (file descriptors, swappiness).

- Configuration: Manage broker (static/dynamic) and topic (replication, partitions, retention) settings carefully. KRaft requires specific quorum configurations.

- Maintenance: Monitor Kafka's own logs (server, controller, state-change), manage disk space, perform health checks, and apply security patches.

- Upgrades: Plan carefully, use rolling restarts for availability, and understand compatibility.

- Scaling: Gracefully add/remove brokers with data migration. Use kafka-reassign-partitions.sh for balancing, throttling to minimize impact.

- Security: Implement authentication (SASL/mTLS), authorization (ACLs/RBAC), encryption (SSL/TLS), and network security.

Kafka Monitoring and Alerting: Your Eyes and Ears

Proactive and comprehensive monitoring is not just a best practice; it's a necessity for maintaining a healthy Kafka production environment. Effective monitoring allows for early detection of issues, facilitates performance optimization, aids in capacity planning, and ultimately ensures the integrity and availability of your data streams (Middleware.io: Kafka Monitoring Guide).

Why Monitoring is Crucial in Production

- Early Issue Detection: Identify problems like broker failures, high consumer lag, or resource exhaustion before they escalate into major outages.

- Performance Optimization: Understand bottlenecks and areas for improvement in producers, consumers, or brokers.

- Capacity Planning: Track resource utilization trends (disk, CPU, network) to forecast future needs and scale proactively.

- Ensuring Data Integrity and Availability: Monitor replication status (under-replicated partitions) and end-to-end data flow to guarantee data is not lost and is accessible.

Key Metrics Categories and What They Indicate

Monitoring a wide array of metrics across different components provides a holistic view of the cluster's health.

Broker Metrics

- OS Level:

- CPU Utilization: High CPU can indicate overloaded brokers.

- Memory Usage (Heap and Off-Heap/Page Cache): Crucial for performance; Kafka relies heavily on the OS page cache.

- Disk I/O & Disk Space: Kafka is disk-intensive. Monitor for full disks or I/O bottlenecks.

- Network I/O: Tracks data flowing in and out of brokers.

- JVM Metrics:

- Heap Usage: Monitor for potential memory leaks or insufficient heap allocation.

- Garbage Collection (GC) Activity (Count, Time): Frequent or long GC pauses can severely impact performance.

- Thread Count: Ensure sufficient threads for various operations.

- Kafka Specific (JMX MBeans examples):

- kafka.server:type=BrokerTopicMetrics,name=MessagesInPerSec: Rate of messages received by the broker.

- kafka.server:type=BrokerTopicMetrics,name=BytesInPerSec/BytesOutPerSec: Data throughput, indicating load.

- kafka.network:type=RequestMetrics,name=RequestsPerSec,request={Produce|FetchConsumer|FetchFollower}: Rate of specific request types.

- kafka.network:type=RequestMetrics,name=TotalTimeMs,request={Produce|FetchConsumer}: Latency of produce and fetch requests, critical for performance.

- kafka.server:type=ReplicaManager,name=UnderReplicatedPartitions: Number of partitions with fewer than the desired number of in-sync replicas. This should ideally be zero. Critical for HA.

- kafka.server:type=ReplicaManager,name=IsrShrinksPerSec/IsrExpandsPerSec: Rate at which In-Sync Replica (ISR) sets shrink or expand. High rates can indicate network issues or struggling replicas.

- kafka.controller:type=KafkaController,name=ActiveControllerCount: Should always be 1 in a healthy cluster.

- kafka.controller:type=KafkaController,name=OfflinePartitionsCount: Number of partitions without an active leader. This should ideally be zero.

- Log flush rate, segment count: Indicate disk writing activity and log management.

Producer Metrics

- record-send-rate: Number of records sent per second.

- record-error-rate: Rate of errors encountered while sending records.

- request-latency-avg: Average time for produce requests.

- batch-size-avg: Average number of bytes in a batch.

- compression-rate-avg: Average compression ratio.

- Monitoring these helps identify issues with message production and delivery efficiency (Middleware.io: Producer Metrics).

Consumer Metrics

- records-lag-max (Consumer Lag): The maximum difference in offsets between the last message produced to a partition and the last message consumed from that partition by a consumer group. This is one of the most critical consumer metrics, indicating data freshness and processing delays.

- fetch-rate: Rate at which consumers fetch messages.

- bytes-consumed-rate: Data consumption throughput.

- commit-latency-avg: Average time for consumers to commit offsets.

- rebalance_latency_avg/max, failed_rebalances_total: Metrics related to consumer group rebalances. Frequent or long rebalances disrupt consumption. (Note: specific metric names might vary with rebalancing protocol, e.g., cooperative).

- Monitoring these helps ensure consumers are processing messages promptly and efficiently (Middleware.io: Consumer Metrics).
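Consumer lag is simple arithmetic once the offsets are known: for each partition, subtract the group's committed offset from the log end offset. The sketch below assumes the offsets have already been collected (for example from a monitoring tool); the offset values and the function name partition_lag are hypothetical, and treating a partition with no commit as offset 0 is a simplifying assumption.

```python
def partition_lag(log_end_offsets: dict[int, int],
                  committed_offsets: dict[int, int]) -> dict[int, int]:
    """Lag per partition: newest offset minus the group's committed offset."""
    return {
        # A partition with no commit is treated as starting from offset 0.
        p: max(end - committed_offsets.get(p, 0), 0)
        for p, end in log_end_offsets.items()
    }


# Hypothetical offsets for a three-partition topic.
end_offsets = {0: 1500, 1: 980, 2: 2210}
committed = {0: 1500, 1: 900, 2: 1710}
lag = partition_lag(end_offsets, committed)
max_lag = max(lag.values())  # worst partition, analogous to records-lag-max
```

Here partition 2 is 500 records behind while partition 0 is fully caught up, which is the kind of skew that a single group-wide average would hide.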

Topic/Partition Metrics

- Per-partition log size: Helps in understanding data distribution and storage growth.

- Message count per partition (can be estimated).

- Under-replicated status per partition: Granular view of replication health.

ZooKeeper Metrics (if applicable)

- zk_avg_latency: Average latency of ZooKeeper requests.

- zk_outstanding_requests: Number of pending requests. High numbers indicate ZK is struggling.

- zk_num_alive_connections: Number of active client connections to ZooKeeper.

- Monitoring ZooKeeper is crucial as its failure can lead to Kafka cluster failures (Middleware.io: ZooKeeper Metrics).

KRaft Metrics (if applicable)

- Quorum health: Status of the KRaft controller quorum.

- Leader status: Which controller is the active leader.

- Commit latency for the metadata log (__cluster_metadata topic).

- Controller-specific metrics: Some controller metrics in KRaft mode may differ from ZooKeeper-based controller metrics due to the architectural change (Aiven Docs: Transitioning to KRaft). Key metrics include those related to the metadata log replication and processing.

Monitoring Tools and Techniques

- JMX (Java Management Extensions): Kafka exposes a wealth of metrics via JMX by default.

- Tools to access JMX: JConsole (bundled with the JDK), VisualVM (bundled with older JDKs, now a separate download), and Kafka's own kafka.tools.JmxTool.

- Key MBeans exist for brokers, producers, and consumers, providing granular insights (Confluent Docs: Monitoring with JMX, Medium: Exposing Kafka Metrics via JMX). Examples include kafka.server:*, kafka.producer:*, kafka.consumer:*.

- Prometheus and Grafana: A very popular open-source combination for metrics collection, storage, and visualization. This typically requires a JMX exporter (e.g., jmx_prometheus_javaagent or Confluent's JMX Metric Reporter) to scrape JMX metrics from Kafka and expose them in a Prometheus-compatible format.

- Confluent Control Center / Confluent Cloud Monitoring: For users of Confluent Platform or Confluent Cloud, these tools offer comprehensive, out-of-the-box monitoring dashboards, alert management, and operational insights.

- Commercial APM/Observability Tools: Solutions like Datadog, New Relic, Dynatrace, Middleware.io, and Acceldata provide Kafka monitoring capabilities, often with auto-discovery, pre-built dashboards, and advanced analytics.

- Log Aggregation: Tools like the ELK Stack (Elasticsearch, Logstash, Kibana), Splunk, or Grafana Loki are essential for centralizing, searching, and analyzing Kafka broker, client, and ZooKeeper/KRaft logs. This is crucial for troubleshooting.

Setting Up Effective Alerting

Metrics are only useful if they drive action. Alerting is key to this.

- Define Critical Thresholds: Set alerts for key metrics crossing critical thresholds. Examples:

- Consumer Lag (records-lag-max) > X records for Y minutes.

- Disk Usage above a set percentage (e.g., 80%).

- UnderReplicatedPartitions > 0 for more than a few minutes.

- OfflinePartitionsCount > 0.

- High request latency (e.g., Produce/Fetch latency > Z ms).

- Active controller count not equal to 1.

- Alert on Error Rates and Anomalies: Monitor producer error rates, consumer processing errors (if logged), and significant deviations from baseline performance.

- Integrate with Incident Management Systems: Send alerts to systems like PagerDuty, Opsgenie, or Slack to ensure timely notification and response.

- Avoid Alert Fatigue: Focus on actionable alerts. Too many noisy alerts can lead to them being ignored. Alerts should signify a real or impending problem that requires attention. Tune thresholds and alert conditions regularly.
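Conditions such as "greater than 0 for more than a few minutes" are one way to keep alerts actionable. The following sketch evaluates a sustained breach over timestamped samples; in a real deployment this logic usually lives in the alerting system itself. The function name sustained_breach and the sample readings are hypothetical.

```python
def sustained_breach(samples: list[tuple[float, float]],
                     threshold: float, min_duration_s: float) -> bool:
    """True if the metric has stayed above threshold for min_duration_s up to now.

    samples: (unix_timestamp_seconds, value) pairs in ascending time order.
    """
    breach_start = None
    for ts, value in samples:
        if value > threshold:
            if breach_start is None:
                breach_start = ts
        else:
            breach_start = None  # the metric recovered, so the clock resets
    return (breach_start is not None
            and samples[-1][0] - breach_start >= min_duration_s)


# UnderReplicatedPartitions sampled once a minute (hypothetical readings).
brief_blip = [(0, 0), (60, 2), (120, 0), (180, 0)]
real_problem = [(0, 0), (60, 2), (120, 3), (180, 3), (240, 1)]
page_for_blip = sustained_breach(brief_blip, 0, 120)
page_for_problem = sustained_breach(real_problem, 0, 120)
```

The one-minute blip, typical of a broker restart, does not page anyone, while the three-minute breach does.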

Key Takeaways: Kafka Monitoring and Alerting

- Importance: Crucial for early issue detection, performance optimization, capacity planning, and ensuring data integrity.

- Key Metric Categories: Monitor Broker (OS, JVM, Kafka-specific like UnderReplicatedPartitions, RequestLatency), Producer (send-rate, error-rate), Consumer (ConsumerLag, fetch-rate), Topic/Partition, and ZooKeeper/KRaft health.

- Tools: Utilize JMX, Prometheus/Grafana, Confluent Control Center, commercial APM tools, and log aggregation systems (ELK, Splunk).

- Alerting: Define critical thresholds for actionable alerts on metrics like consumer lag, disk usage, and under-replicated partitions. Integrate with incident management systems.

Kafka Performance Tuning and Optimization

Performance tuning in Kafka is an iterative process, heavily reliant on understanding your specific workload, monitoring key metrics, and making informed adjustments. The goal is to maximize throughput, minimize latency, and ensure efficient resource utilization without compromising reliability.

Identifying Performance Bottlenecks

Before tuning, identify the bottleneck. Is it CPU, memory (heap or page cache), disk I/O, or network bandwidth?

- Metrics Analysis: Use the metrics discussed in the previous section. High CPU utilization on brokers might point to insufficient network or I/O threads, or heavy SSL/compression overhead. High disk I/O wait times suggest disk bottlenecks. High network saturation indicates network limitations.

- Latency Analysis: Analyze request latencies (e.g., ProduceRequestTotalTimeMs, FetchConsumerRequestTotalTimeMs). Break down latency into components like queue time, local time, remote time, and throttle time to pinpoint delays.

Producer Tuning

Key producer configurations impact throughput, latency, and durability:

- acks:

- acks=0: Producer doesn't wait for acknowledgment. Lowest latency, but highest risk of message loss.

- acks=1: Producer waits for acknowledgment from the leader replica only. Balances latency and durability. Message loss can occur if the leader fails before followers replicate.

- acks=all (or -1): Producer waits for acknowledgment from all in-sync replicas (ISR). Highest durability, but higher latency. This is generally recommended for critical data.

- batch.size: Controls the maximum size of a batch of records the producer will group together before sending. Larger batches generally improve compression and throughput (fewer requests) but increase end-to-end latency for individual messages. Default is 16KB.

- linger.ms: The producer will wait up to this amount of time to allow more records to be batched together before sending, even if batch.size isn't reached. A value greater than 0 can increase throughput at the cost of slight additional latency. Default is 0 (5 as of Kafka 4.0).

- compression.type: (none, gzip, snappy, lz4, zstd). Compressing data reduces network bandwidth usage and disk storage on brokers, but adds CPU overhead on both producer and consumer. Snappy and LZ4 offer a good balance of compression ratio and CPU cost. Zstd often provides better compression at comparable CPU.

- buffer.memory: Total memory (in bytes) the producer can use to buffer records waiting to be sent to the server. If records are sent faster than they can be delivered to the server, this buffer will be exhausted. Default is 32MB.

- max.block.ms: Controls how long the send() call will block if buffer.memory is full or metadata is unavailable.

- Retries (retries, retry.backoff.ms): Number of times the producer will retry sending a message on transient errors. retry.backoff.ms is the time to wait before retrying.

- Idempotence (enable.idempotence=true): Ensures that retries do not result in duplicate messages in the log, providing exactly-once semantics per partition for the producer. Requires acks=all, retries > 0, and max.in.flight.requests.per.connection <= 5.
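The constraints that idempotence places on other producer settings lend themselves to a small configuration lint. The sketch below checks a plain dictionary of producer properties against the three requirements listed above. The function name idempotence_violations and the example values are illustrative, and requiring each key to be set explicitly is a design choice of this sketch, not a Kafka rule.

```python
# Each rule maps a producer property to a predicate it must satisfy.
RULES = {
    "acks": lambda v: str(v) in ("all", "-1"),
    "retries": lambda v: int(v) > 0,
    "max.in.flight.requests.per.connection": lambda v: int(v) <= 5,
}


def idempotence_violations(config: dict) -> list[str]:
    """List settings that are missing or conflict with enable.idempotence=true."""
    problems = []
    for key, is_ok in RULES.items():
        if key not in config:
            problems.append(f"{key} is not set explicitly")
        elif not is_ok(config[key]):
            problems.append(f"{key}={config[key]!r} conflicts with idempotence")
    return problems


# A durability-oriented producer configuration; the values are illustrative.
producer_config = {
    "acks": "all",
    "enable.idempotence": True,
    "retries": 2147483647,
    "max.in.flight.requests.per.connection": 5,
    "compression.type": "lz4",
    "linger.ms": 10,
    "batch.size": 65536,
}
problems = idempotence_violations(producer_config)
```

A check like this can run in CI against application configuration, so that a later change to acks or the in-flight limit is caught before it reaches production.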

Consumer Tuning

Key consumer configurations affect processing speed, resource usage, and message delivery guarantees:

- fetch.min.bytes: The minimum amount of data the server should return for a fetch request. If insufficient data is available, the request will wait up to fetch.max.wait.ms. Increasing this can reduce broker load by processing fewer, larger fetch requests, improving throughput. Default is 1 byte.

- fetch.max.wait.ms: Maximum time the server will block before answering a fetch request if fetch.min.bytes is not met. Default is 500ms.

- max.poll.records: Maximum number of records returned in a single call to poll(). Default is 500. Adjust based on how fast your consumer can process records.

- auto.offset.reset: What to do when there is no initial offset in Kafka or if the current offset does not exist anymore on the server (e.g., because data has been deleted):

- latest: Automatically reset the offset to the latest offset.

- earliest: Automatically reset the offset to the earliest offset.

- none: Throw exception to the consumer if no previous offset is found for the consumer's group.

- enable.auto.commit vs. Manual Commit:

- enable.auto.commit=true: Offsets are committed automatically at a frequency controlled by auto.commit.interval.ms. Simpler, but can lead to message loss if the consumer crashes after a commit but before it has processed the committed records, or to message duplication if it crashes after processing records but before the next auto-commit.

- Manual Commit (enable.auto.commit=false): Consumer explicitly commits offsets (synchronously or asynchronously). Provides more control and better delivery guarantees (at-least-once, or can be used to build exactly-once with careful state management).

- Consumer Parallelism: The number of consumer instances in a consumer group should ideally match the number of partitions for the consumed topic(s) for maximum parallelism. If consumers are slow, increasing partitions and consumer instances can help, provided the processing logic itself is not the bottleneck.

- Processing Logic Efficiency: The most significant factor for consumer performance is often the application code that processes the messages. Optimize this code to be as fast and non-blocking as possible.
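The effect of commit ordering on delivery guarantees can be seen in a toy simulation. This is a sketch under simplifying assumptions (a single partition, one record per step, and a crash that always falls between the commit and the processing of one record); replay is a hypothetical helper, not a Kafka API.

```python
def replay(records, crash_at, commit_first):
    """Simulate a consumer that crashes while handling records[crash_at],
    then a restarted consumer that resumes from the last committed offset.
    Returns every record that was processed, in order."""
    processed = []
    committed = 0  # next offset a restarted consumer would read

    for offset, record in enumerate(records):
        if commit_first:
            committed = offset + 1
            if offset == crash_at:
                break  # crash after the commit, before processing
            processed.append(record)
        else:
            processed.append(record)
            if offset == crash_at:
                break  # crash after processing, before the commit
            committed = offset + 1

    # The restarted consumer picks up at the committed offset.
    processed.extend(records[committed:])
    return processed


print(replay(["a", "b", "c", "d"], crash_at=2, commit_first=True))
print(replay(["a", "b", "c", "d"], crash_at=2, commit_first=False))
```

Committing first drops the record in flight at the time of the crash (at-most-once), while committing after processing replays it (at-least-once), which is why downstream processing should tolerate duplicates when manual commits are used.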

Broker Tuning

Key broker configurations (in server.properties) influence overall cluster performance:

- num.network.threads: Number of threads the broker uses for receiving requests from the network and sending responses. Typically set to the number of CPU cores. Default is 3. (meshIQ: Top 10 Tuning Tips)

- num.io.threads: Number of threads the broker uses for processing requests, including disk I/O. Typically set to at least the number of disks or more (e.g., 2x cores). Default is 8. (meshIQ: Top 10 Tuning Tips)

- num.replica.fetchers: Number of threads used for replicating messages from leader partitions on other brokers. Increase if followers are lagging. Default is 1.

- JVM Heap Size (KAFKA_HEAP_OPTS): Set via environment variable (e.g., -Xmx6g -Xms6g). Balance between too small (frequent GC) and too large (long GC pauses). 6-8GB is a common starting point. Monitor GC activity closely.

- Page Cache: Kafka relies heavily on the OS page cache for read/write performance. Ensure ample free memory is available for the page cache.

- Log Flush Settings (log.flush.interval.messages, log.flush.interval.ms): Control how frequently data is flushed from page cache to disk. Kafka's durability comes from replication, so aggressive flushing is often not needed and can hurt performance. Rely on OS background flushes. Defaults are usually fine.

- message.max.bytes: Maximum size of a message (record batch) the broker will accept. Must be coordinated with producer (max.request.size), consumer (max.partition.fetch.bytes, fetch.max.bytes), and replication (replica.fetch.max.bytes) settings. Default is ~1MB.

- replica.lag.time.max.ms: If a follower replica has not caught up to the leader's log end offset for this amount of time, it is removed from the ISR. Default is 30000ms (30 seconds).

Topic and Partition Strategy

- Number of Partitions: A critical tuning parameter. More partitions allow for greater parallelism but also increase overhead (more open files, more leader elections, increased metadata). Too many partitions can lead to higher end-to-end latency and increased load on the controller (meshIQ: Adjust Partition Count, Instaclustr: Going overboard with partitions). The optimal number depends on throughput targets, consumer processing speed, and desired parallelism.

- Replication Factor: Typically 3 for production environments to balance durability and resource cost.

- Partitioning Keys: If message ordering for a certain key is required, producers should send messages with that key. Kafka guarantees that all messages with the same key go to the same partition. A good key distribution is important to avoid data skew (some partitions much larger/busier than others).
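A commonly used starting point for the partition count is to divide the target throughput by the measured per-partition throughput of producers and of consumers, and take the larger result. The sketch below shows that arithmetic; it is a rule of thumb rather than a method prescribed by this guide, and the per-partition figures are values you would have to measure in your own environment.

```python
import math


def estimate_partitions(target_mb_s, producer_mb_s_per_partition,
                        consumer_mb_s_per_partition):
    """Rule-of-thumb partition count for a topic.

    All three arguments are throughput figures in MB/s. The per-partition
    values are measurements from your own benchmarks (hypothetical inputs).
    """
    needed_for_producers = target_mb_s / producer_mb_s_per_partition
    needed_for_consumers = target_mb_s / consumer_mb_s_per_partition
    return math.ceil(max(needed_for_producers, needed_for_consumers))


# Example: 100 MB/s target, 10 MB/s per partition on the produce side,
# 4 MB/s per partition on the consume side.
print(estimate_partitions(100, 10, 4))
```

The slower side (usually the consumer) dictates the count. Treat the result as a lower bound to validate with load testing, keeping the overhead of extra partitions described above in mind.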

Network Optimization

- Ensure sufficient network bandwidth between clients and brokers, and between brokers themselves for replication traffic.

- Minimize network latency. Co-locating clients and brokers in the same data center/availability zone is ideal. Cross-AZ or cross-region traffic will incur higher latency.

- Tune TCP buffer sizes (socket.send.buffer.bytes and socket.receive.buffer.bytes on brokers; send.buffer.bytes and receive.buffer.bytes on clients) if default OS settings are suboptimal for high-throughput workloads.

Operating System Tuning

- File Descriptor Limits: As mentioned before, raise the open-file limit (ulimit -n) significantly.

- Filesystem Choice: XFS or ext4 are common. XFS is often favored for its performance with large files.

- Swappiness: Set vm.swappiness to a low value (e.g., 1) to discourage swapping.

- Ensure sufficient virtual memory map counts (vm.max_map_count).

Key Takeaways: Kafka Performance Tuning

- Iterative Process: Tuning is based on monitoring and workload specifics.

- Producers: Balance acks (durability vs. latency), batch.size & linger.ms (throughput vs. latency), and use compression.type wisely. Enable idempotence for critical data.

- Consumers: Adjust fetch.min.bytes & fetch.max.wait.ms, manage offset commits carefully (manual vs. auto), and ensure consumer processing logic is efficient. Parallelism is key.

- Brokers: Tune num.network.threads, num.io.threads, JVM heap, and rely on page cache. Ensure message.max.bytes and replica.lag.time.max.ms are appropriate.

- Topics/Partitions: Choose partition count carefully to balance parallelism and overhead. Use partitioning keys for ordering and distribution.

- Network/OS: Ensure adequate bandwidth, low latency, and tune OS parameters like file descriptors and swappiness.

Troubleshooting Common Kafka Issues

Despite careful planning and tuning, issues can arise in a Kafka production environment. A systematic approach to troubleshooting is essential for quick resolution.

General Troubleshooting Approach

- Check Logs First: This is often the most direct way to find error messages or clues. Examine:

- Broker logs (server.log, controller.log, state-change.log).

- Client application logs (producer and consumer).

- ZooKeeper logs (if applicable) or KRaft controller logs.

- Correlate with Metrics: Review monitoring dashboards. What metrics changed around the time the issue started? Look for spikes in latency, error rates, resource utilization, or drops in throughput.

- Use Kafka Command-Line Tools: These tools are invaluable for diagnosis (each also takes --bootstrap-server <host:port>):

- kafka-topics.sh --describe --topic <topic_name>: Shows partition leaders, replicas, ISRs.

- kafka-consumer-groups.sh --describe --group <group_name>: Shows consumer lag per partition.

- kafka-broker-api-versions.sh: Checks broker connectivity and supported API versions.

- kafka-log-dirs.sh --describe: Shows log sizes on brokers.

- Isolate the Problem:

- Is the issue affecting all topics/clients or only specific ones?

- Is it localized to a particular broker or availability zone?

- Did it start after a recent change (deployment, configuration update, scaling event)?

Common Problems and Solutions

Message Loss

This is one of the most critical issues. Message loss can occur at various points in the data pipeline.

- Causes:

- Producer:

- acks set to 0 or 1: With acks=0, the producer doesn't wait for any acknowledgment. With acks=1, if the leader broker fails after acknowledging but before followers replicate, data can be lost.

- Insufficient retries for transient errors.

- Non-idempotent producers can resend messages after certain failures, which causes duplicates rather than loss if not handled; actual loss usually stems from under-acknowledgment.

- Broker:

- Unclean leader election (if unclean.leader.election.enable=true, which is generally not recommended for data safety). This allows an out-of-sync replica to become leader, potentially losing messages acknowledged by the old leader but not replicated.

- Insufficient min.insync.replicas: If set too low (e.g., 1 for a replication factor of 3), acks can be sent before sufficient replication, and subsequent failures can lead to loss. For RF=3, min.insync.replicas=2 is common.

- Disk failures on multiple brokers holding all replicas of a partition before data is fully replicated and acknowledged according to acks=all.

- Consumer:

- Auto-commit enabled (enable.auto.commit=true) and consumer crashes after committing offsets but before fully processing the messages.

- Errors in manual offset commit logic (e.g., committing too early).

- Troubleshooting & Mitigation:

- Verify producer acks setting (use all for critical data).

- Ensure producers have retries configured and consider enabling idempotence.

- Set unclean.leader.election.enable=false on brokers.

- Configure min.insync.replicas appropriately (e.g., for RF=N, set to N-1, typically 2 for RF=3).

- For consumers, prefer manual offset commits and commit only after messages are fully processed. Consider transactional processing for stronger guarantees.

- Monitor UnderReplicatedPartitions and OfflinePartitionsCount closely.
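The mitigation checklist above lends itself to a small configuration lint. The sketch below is illustrative only: durability_warnings is a hypothetical helper, and the thresholds simply encode the guidance in this section rather than any built-in Kafka validation.

```python
def durability_warnings(acks, replication_factor, min_insync_replicas,
                        unclean_leader_election):
    """Flag settings that make acknowledged-message loss more likely."""
    warnings = []

    if str(acks) not in ("all", "-1"):
        warnings.append("acks is not all: a write can be acknowledged "
                        "before followers have replicated it")

    if unclean_leader_election:
        warnings.append("unclean.leader.election.enable=true: an "
                        "out-of-sync replica may become leader")

    if min_insync_replicas < 2:
        warnings.append("min.insync.replicas < 2: an acknowledged write "
                        "may exist on a single replica only")

    if replication_factor > 1 and min_insync_replicas >= replication_factor:
        warnings.append("min.insync.replicas equals the replication factor: "
                        "losing any one replica blocks acks=all writes")

    return warnings
```

For the commonly recommended combination (acks=all, RF=3, min.insync.replicas=2, unclean election disabled) the function returns an empty list.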

High Consumer Lag

Consumer lag indicates that consumers are not keeping up with the rate of message production.

- Causes:

- Slow consumer processing logic (inefficient code, blocking calls, external dependencies).

- Insufficient consumer parallelism (fewer active consumer instances than partitions, or some consumers are significantly slower than others).

- Network bottlenecks between consumers and brokers.

- Broker performance issues (high load, disk I/O limits).

- Sudden bursts of messages overwhelming consumers.

- Uneven partition load (data skew) due to poor partitioning strategy.

- Diagnosis:

- Monitor records-lag-max per consumer group and partition.

- Check consumer application logs for errors, exceptions, or indications of slow processing steps.

- Analyze CPU/memory/network utilization on consumer instances.

- Examine partition message distribution using tools or broker metrics.

- Resolution:

- Optimize consumer application code: improve algorithms, make I/O non-blocking, batch process where possible.

- Increase consumer instances up to the number of partitions.

- If partitions are a bottleneck and ordering allows, consider increasing the number of partitions for the topic (and then consumer instances).

- Address any broker-side performance issues.

- Improve partitioning strategy to ensure more even data distribution if skew is detected.

- Tune consumer fetch parameters (fetch.min.bytes, max.poll.records).
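Per-partition lag is the difference between the log end offset and the group's committed offset, and it can be extracted from the output of kafka-consumer-groups.sh --describe. The sketch below parses such output by column name. The sample text is invented for illustration, and the exact column layout is an assumption that may differ between Kafka versions.

```python
def lag_by_partition(describe_output):
    """Map (topic, partition) -> lag from `--describe` style text output."""
    rows = [line.split() for line in describe_output.strip().splitlines()
            if line.strip()]
    header, data = rows[0], rows[1:]
    topic_col = header.index("TOPIC")
    partition_col = header.index("PARTITION")
    lag_col = header.index("LAG")

    lags = {}
    for row in data:
        if row[lag_col] == "-":  # no committed offset for this partition yet
            continue
        lags[(row[topic_col], int(row[partition_col]))] = int(row[lag_col])
    return lags


# Hypothetical sample output, shaped like the tool's table.
SAMPLE = """
GROUP       TOPIC   PARTITION  CURRENT-OFFSET  LOG-END-OFFSET  LAG   CONSUMER-ID  HOST       CLIENT-ID
orders-app  orders  0          1200            1250            50    consumer-a   /10.0.0.5  client-a
orders-app  orders  1          900             4900            4000  consumer-b   /10.0.0.6  client-b
orders-app  orders  2          1100            1100            0     consumer-c   /10.0.0.7  client-c
"""

lags = lag_by_partition(SAMPLE)
worst = max(lags, key=lags.get)
print("total lag:", sum(lags.values()), "worst partition:", worst)
```

When one partition carries most of the lag, as in this sample, the cause is more likely data skew or a single slow consumer than a group-wide capacity problem.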

Broker Failures / Unavailability

- Diagnosis:

- Check broker logs (server.log, controller.log) for error messages like OutOfMemoryError (OOM), disk full errors, network exceptions, or issues connecting to ZooKeeper/KRaft.

- Monitor controller logs for leader election activity. If a broker fails, the controller will elect new leaders for its partitions.

- Check ISR status for partitions previously led by the failed broker using kafka-topics.sh --describe.

- Use OS-level tools (dmesg, top, iostat, netstat) on the affected broker machine.

- Resolution:

- Identify and address the underlying cause (e.g., add disk space, increase heap, fix network configuration).

- Restart the broker. If it was an intentional shutdown, ensure controlled.shutdown.enable=true was set on the broker to allow graceful leadership transfer and log syncing (Confluent Docs: Post-Deployment).

- If the broker cannot be recovered quickly, ensure its partitions have successfully failed over to other replicas. You might need to reassign partitions off the dead broker if it's permanently lost.

Performance Degradation (Slow Producers/Consumers)

- Diagnosis:

- Analyze latency metrics: producer request-latency-avg, consumer fetch latency, end-to-end message latency.

- Check resource utilization (CPU, memory, disk I/O, network) on brokers, producers, and consumers.

- Look for network issues: packet loss, high retransmits, saturation.

- Examine broker logs for frequent GC pauses or other warnings.

- Resolution:

- Apply tuning techniques from the "Performance Tuning" section (e.g., adjust batch sizes, compression, thread counts, consumer fetch parameters).

- Identify and fix inefficient code in producer or consumer applications.

- Scale brokers or clients if resource-constrained.

Connectivity Issues (Producers, Consumers, Inter-Broker)

- Diagnosis:

- Check network ACLs, firewalls, security groups – are the necessary ports open between relevant hosts? (e.g., 9092, 9093 for brokers, 2181 for ZK, KRaft controller ports).

- Verify DNS resolution for broker hostnames.

- Check Kafka listener configuration on brokers (listeners and advertised.listeners; the legacy advertised.host.name/advertised.port settings were removed in Kafka 3.0). The advertised listeners must be reachable by clients.

- Ensure security protocol (PLAINTEXT, SSL, SASL_SSL, SASL_PLAINTEXT) and SASL mechanism configurations match between client and server.

- Use tools like telnet, nc, or openssl s_client to test basic network connectivity and SSL handshake.

- Resolution:

- Correct network/firewall rules.

- Ensure DNS is resolving correctly.

- Fix broker listener configurations. Ensure advertised.listeners point to addresses that clients can resolve and connect to.

- Align security configurations on clients and brokers.
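A quick way to verify that advertised listeners are reachable is to split the configured value into host and port pairs and attempt a TCP connection to each from a client machine. The sketch below does this with the standard library only; parse_listeners and can_connect are hypothetical helper names, and the check covers TCP reachability only, not TLS or SASL negotiation.

```python
import socket


def parse_listeners(value):
    """Split a listeners/advertised.listeners value into
    (listener_name, host, port) tuples."""
    parsed = []
    for entry in value.split(","):
        name, _, address = entry.strip().partition("://")
        host, _, port = address.rpartition(":")
        parsed.append((name, host, int(port)))
    return parsed


def can_connect(host, port, timeout=3.0):
    """Return True if a TCP connection to host:port succeeds within timeout."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        return False


# Example value with placeholder hostnames.
advertised = "PLAINTEXT://broker1.example.com:9092,SSL://broker1.example.com:9093"
for name, host, port in parse_listeners(advertised):
    print(name, host, port)
```

Running can_connect(host, port) for each tuple from the client's network location separates firewall and DNS problems from Kafka-level security mismatches.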

ZooKeeper/KRaft Issues

- ZooKeeper (Legacy):

- Common Issues: Connectivity problems (brokers can't reach ZK), session timeouts, leader election storms in ZK, slow ZK performance.

- Diagnosis: Check ZooKeeper ensemble logs (zookeeper.out). Check Kafka broker logs for ZooKeeper connection errors or session expiry messages. Monitor ZK metrics like zk_avg_latency, zk_outstanding_requests. (Scaler: Kafka Issues, Codemia: Kafka unable to connect to Zookeeper).

- Resolution: Ensure ZK ensemble health (a majority of the N nodes, floor(N/2)+1, must be up). Verify network stability between Kafka brokers and ZK. Ensure ZK has sufficient resources (CPU, memory, fast disk for transaction logs). Tune ZK session timeout (zookeeper.session.timeout.ms on Kafka brokers) if needed, but be cautious as too high values can delay failure detection.

- KRaft (Modern):

- Common Issues: Controller quorum loss, slow metadata propagation, issues with the metadata log (__cluster_metadata topic).

- Diagnosis: Check KRaft controller logs and broker logs for errors related to Raft consensus, leader election, or metadata log replication. Monitor KRaft-specific metrics related to quorum health, active controller, and metadata log commit latency.

- Resolution: Ensure a healthy quorum of controller nodes (typically 3 or 5). Verify network connectivity between controllers and between controllers and brokers. Ensure controllers have adequate resources.
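Both a ZooKeeper ensemble and a KRaft controller quorum need a strict majority of their members to stay available. The arithmetic is small enough to sketch directly; the function names are illustrative.

```python
def quorum_size(members):
    """Smallest number of members that forms a strict majority."""
    return members // 2 + 1


def tolerated_failures(members):
    """How many members can fail while a majority remains."""
    return members - quorum_size(members)


for n in (3, 4, 5):
    print(n, "members: quorum", quorum_size(n),
          "tolerates", tolerated_failures(n), "failure(s)")
```

The loop shows why odd sizes are preferred: four members tolerate no more failures than three do.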

Partition Rebalancing Storms / Frequent Rebalances (Consumers)

- Causes:

- Consumers joining or leaving the group frequently (e.g., due to flapping instances, scaling events).

- Consumer session timeouts (session.timeout.ms): If a consumer fails to send a heartbeat within this period, it's considered dead, triggering a rebalance.

- Long message processing times exceeding max.poll.interval.ms: If poll() is not called within this interval, the consumer is kicked out of the group.

- Impact: Rebalances pause message consumption for the entire group, impacting throughput and latency.

- Mitigation:

- Increase session.timeout.ms and heartbeat.interval.ms (heartbeat.interval.ms should be significantly lower than session.timeout.ms, typically 1/3).

- Increase max.poll.interval.ms if processing per batch of records is genuinely long. However, also optimize processing logic.

- Use static group membership (group.instance.id) to reduce rebalances on consumer restarts.

- Consider using the cooperative (incremental) rebalancing strategy (partition.assignment.strategy set to include CooperativeStickyAssignor), which aims to minimize the "stop-the-world" effect of rebalances.
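The relationships between these timeouts can be sanity-checked before deployment. The sketch below assumes you can estimate a worst-case processing time per record; that parameter and the function name rebalance_risks are hypothetical and not part of any Kafka API.

```python
def rebalance_risks(session_timeout_ms, heartbeat_interval_ms,
                    max_poll_interval_ms, max_poll_records,
                    worst_case_ms_per_record):
    """Flag consumer settings that are likely to trigger avoidable rebalances."""
    risks = []

    # Heartbeats should fit roughly three times into one session timeout.
    if heartbeat_interval_ms * 3 > session_timeout_ms:
        risks.append("heartbeat.interval.ms should be at most 1/3 of "
                     "session.timeout.ms")

    # A full batch must be processed before the next poll() is due.
    worst_case_batch_ms = max_poll_records * worst_case_ms_per_record
    if worst_case_batch_ms >= max_poll_interval_ms:
        risks.append("a full batch may take %d ms, which is not below "
                     "max.poll.interval.ms" % worst_case_batch_ms)

    return risks
```

If the second check fires, lowering max.poll.records is often a gentler fix than raising max.poll.interval.ms, because a long poll interval also delays detection of consumers that are genuinely stuck.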

Data Skew (Uneven Partition Load)

- Causes:

- Poor choice of partitioning key, leading to many messages hashing to the same few partitions.

- Insufficient number of partitions for the cardinality of keys (if keys are used).

- Producers explicitly sending to specific partitions in an unbalanced way.

- Impact: Some brokers/partitions and their corresponding consumers become hotspots (overloaded), while others are underutilized. This leads to bottlenecks and high lag for affected partitions.

- Resolution:

- Choose a partitioning key with high cardinality and good distribution.

- If not using keys, rely on the producer's default partitioning for keyless records (round-robin in older clients, sticky partitioning since Kafka 2.4), which spreads records evenly over time.

- Increase the number of partitions if the current number is too low for the key space or throughput needs (requires careful planning).

- Implement a custom partitioner if default strategies are insufficient.

- Monitor partition sizes and throughput to detect skew.
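How key cardinality drives skew can be shown with a small simulation. Note the assumption: Kafka's Java client hashes keys with murmur2, whereas this sketch substitutes zlib.crc32 purely as a stand-in, so the partition numbers will not match a real cluster. Only the distribution pattern is meaningful.

```python
import zlib
from collections import Counter


def partition_for(key, num_partitions):
    # Stand-in hash for illustration; real Kafka clients use murmur2.
    return zlib.crc32(key.encode("utf-8")) % num_partitions


def skew_ratio(keys, num_partitions):
    """Ratio of the busiest partition's record count to the ideal even share.
    1.0 means perfectly even; higher values mean a hotter hotspot."""
    counts = Counter(partition_for(key, num_partitions) for key in keys)
    even_share = len(keys) / num_partitions
    return max(counts.values()) / even_share


# Two tenants, one of them dominant: low-cardinality key.
hot_keys = ["tenant-1"] * 900 + ["tenant-2"] * 100
# Ten thousand distinct user ids: high-cardinality key.
spread_keys = ["user-%d" % i for i in range(10000)]

print("low cardinality :", skew_ratio(hot_keys, 4))
print("high cardinality:", skew_ratio(spread_keys, 4))
```

The same ratio can be computed from real per-partition message counts or log sizes to decide whether a topic needs a different key or a custom partitioner.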

Out of Memory (OOM) Errors on Brokers/Clients

- Diagnosis: Check JVM logs (stdout/stderr or specific log files) for java.lang.OutOfMemoryError. Analyze heap dumps if configured and captured.

- Resolution:

- Brokers: Increase JVM heap size (KAFKA_HEAP_OPTS) if it's too low for the workload. Check for memory leaks (less common in broker itself, but possible with plugins). Ensure enough memory for page cache.

- Clients (Producers/Consumers/Streams): Increase application heap size. Optimize application code to use memory more efficiently (e.g., process messages in smaller batches, release resources, avoid holding large objects in memory). For Kafka Streams, ensure iterators over state stores are closed properly (Confluent Streams FAQ).

Disk Full Errors on Brokers

- Diagnosis: Monitor disk usage metrics (e.g., using df -h or monitoring tools). Check broker logs for "No space left on device" errors.

- Resolution:

- Increase disk space on the affected brokers.

- Adjust log retention policies (log.retention.ms, log.retention.bytes) to retain less data.

- Ensure log cleanup (deletion or compaction) is working correctly. Check log.segment.bytes and related settings.

- If using log compaction, ensure there's enough disk space for segments to be compacted (compaction requires temporary extra space).

- Manually delete older, non-critical topic data if absolutely necessary and approved (risky).
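Disk-full incidents are easier to prevent when retention settings are checked against a capacity estimate. The sketch below is a back-of-the-envelope calculation under simplifying assumptions: partitions are evenly balanced across brokers, the ingress rate is measured after compression, and index and compaction overhead is folded into a headroom factor whose 30% default is an arbitrary illustrative choice.

```python
def disk_per_broker_gb(ingress_mb_s, retention_hours, replication_factor,
                       brokers, headroom=0.3):
    """Approximate disk needed per broker, in GB, for time-based retention."""
    retained_mb = ingress_mb_s * 3600 * retention_hours * replication_factor
    per_broker_gb = retained_mb / 1024 / brokers
    return per_broker_gb * (1 + headroom)


# Example: 10 MB/s ingress, 7 days retention, RF=3, 6 brokers.
print(round(disk_per_broker_gb(10, 168, 3, 6)), "GB per broker")
```

Inverting the calculation gives the longest retention a given disk size can sustain, which is useful when deciding how far to lower log.retention.ms during an incident.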

Key Takeaways: Troubleshooting Common Kafka Issues

- Systematic Approach: Check logs, correlate with metrics, use Kafka tools, isolate the problem.

- Message Loss: Verify producer acks, min.insync.replicas, disable unclean leader election, manage consumer commits carefully.

- High Consumer Lag: Optimize consumer logic, scale consumers, address broker/network bottlenecks, fix data skew.

- Broker Failures: Check logs for root cause (OOM, disk, network), ensure controlled shutdown.

- Connectivity: Verify firewalls, DNS, listener configs, security protocols.

- ZK/KRaft Issues: Monitor quorum health, logs, and connectivity.

- Rebalancing Storms: Tune session/poll timeouts, use static membership or cooperative rebalancing.

- Resource Issues: Address OOM by tuning heap/application logic; address disk full by managing retention/adding space.

High Availability and Disaster Recovery

High availability (HA) and robust disaster recovery (DR) capabilities are critical for production Kafka clusters that underpin essential business services. This section details Kafka's HA mechanisms and strategies for DR planning.

Understanding High Availability (HA) in Kafka

Kafka's HA is built upon its distributed nature and data replication.

- Replication Mechanism: Each partition can be replicated across multiple brokers. For each partition, one broker acts as the "leader" and handles all read and write requests for that partition. Other brokers hosting copies of the partition act as "followers." Followers passively replicate data from the leader.

- In-Sync Replicas (ISR): The ISR is a set of replicas (leader and followers) that are fully caught up with the leader's log. Only replicas in the ISR are eligible to be elected as the new leader if the current leader fails. This is crucial for data consistency.

- min.insync.replicas Setting: This topic-level (or broker-level default) configuration specifies the minimum number of replicas that must be in the ISR for a producer (with acks=all) to successfully write to a partition. For example, if replication factor is 3 and min.insync.replicas is 2, then a write must be acknowledged by the leader and at least one follower. This setting balances availability and durability. If the number of ISRs drops below this value, producers with acks=all will receive errors.

- Leader Election Process: If a leader broker for a partition fails, the controller (either ZooKeeper-based or KRaft-based) elects a new leader from the ISR set for that partition. This process should be quick to minimize unavailability. If unclean.leader.election.enable is false (recommended), only an in-sync replica can become a leader, preventing data loss.
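The interplay between replication factor, ISR size, and min.insync.replicas can be summarized as a small state function. This is a simplified model for reasoning about the trade-off (it assumes unclean leader election is disabled and ignores the timing of ISR shrinkage); the state labels are illustrative and not Kafka terminology.

```python
def partition_state(replication_factor, min_insync_replicas,
                    replicas_out_of_sync):
    """Classify a partition from the point of view of an acks=all producer."""
    in_sync = replication_factor - replicas_out_of_sync
    if in_sync <= 0:
        return "offline"  # no eligible leader without unclean election
    if in_sync < min_insync_replicas:
        return "acks=all writes rejected"
    return "writable"


for lost in range(4):
    print(lost, "replica(s) out of sync ->", partition_state(3, 2, lost))
```

With RF=3 and min.insync.replicas=2, the partition stays writable through one replica failure, rejects acks=all writes after two, and goes offline only when all three replicas are unavailable.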

Disaster Recovery (DR) Planning

DR planning goes beyond single broker failures and considers larger-scale outages.

- Defining RPO and RTO:

- Recovery Point Objective (RPO): The maximum acceptable amount of data loss measured in time (e.g., RPO of 15 minutes means data loss should not exceed the last 15 minutes before the disaster).

- Recovery Time Objective (RTO): The maximum acceptable downtime for restoring the service after a disaster (e.g., RTO of 2 hours means the service must be back online within 2 hours).

- Identifying Critical Topics and Applications: Not all data may require the same level of DR. Prioritize critical topics and applications that have stringent RPO/RTO requirements.

- Considering Disaster Scenarios: Plan for various scenarios:

- Single broker failure (handled by Kafka HA).

- Rack failure.

- Availability Zone (AZ) failure.

- Full regional outage.

Kafka Disaster Recovery (DR) Strategy Selection

Choosing the right DR strategy depends on your RPO/RTO, budget, and operational capabilities. Here's a comparison of common approaches:

Note: The chart above visualizes qualitative parameters (RPO, RTO, Cost, Complexity) on a 1-5 scale for comparison. Lower scores are better for RPO/RTO, while higher scores indicate greater cost/complexity.

Detailed Recommendations:

- Preferred Option for Typical High Availability Needs: Asynchronous Cross-Datacenter Replication

- Reasoning: This approach generally offers a good balance between cost-effectiveness, implementation complexity, and data protection for most critical business needs. It provides data redundancy in a separate geographical location.

- Key Implementation Steps:

- Plan DR network topology, ensuring sufficient bandwidth between data centers.

- Deploy and configure replication tools. Apache Kafka MirrorMaker2 is a common open-source choice. Commercial solutions like Confluent Replicator also exist. (WarpStream: DR and Multi-Region Kafka discusses MirrorMaker2).

- Define and meticulously document clear failover (activating the DR cluster) and failback (returning to the primary cluster) procedures.

- Conduct regular DR drills (e.g., quarterly) to validate RPO/RTO targets, test procedures, and train personnel.

- Prerequisites/Applicable Conditions:

- Acceptable RPO is in the range of minutes to hours.

- Adequate network bandwidth and operational capabilities are available.

- Application architecture can tolerate some latency during failover and potential data reconciliation.

- Alternative/Advanced Option for Extreme Business Continuity: Multi-Active / Near-Synchronous Replication

- Reasoning: Offers the lowest possible data loss (near-zero RPO) and fastest recovery (minimal RTO). Suitable for mission-critical services where any downtime or data loss has severe consequences.

- Key Implementation Steps:

- Evaluate and select appropriate technology. This might involve features like Confluent Cluster Linking, vendor-specific "stretch cluster" solutions, or custom-built active-active replication mechanisms.

- Design and implement a highly robust, low-latency cross-datacenter network architecture and data synchronization mechanisms.

- Establish automated health checks and failover mechanisms to minimize human intervention during a disaster.

- Adapt applications to work in a multi-active architecture, which may involve handling potential data consistency challenges or conflict resolution if both sites can accept writes.

- Prerequisites/Applicable Conditions:

- RPO/RTO requirements are near-zero or measured in seconds.

- Significant budget and advanced technical expertise are available for implementation and maintenance.

- The business value derived from near-continuous availability significantly outweighs the high costs of this DR solution.

Backup Strategies (Complementary to Replication)

While replication is key for DR, backups can serve complementary purposes like archival or recovery from logical corruption (e.g., accidental topic deletion).

- Snapshotting Broker Disks: Involves taking periodic snapshots of the disks used by Kafka brokers. This is generally complex to implement without impacting cluster availability and restoration can be tedious. It's rarely done in practice (WarpStream: DR and Multi-Region Kafka).

- Kafka Connect for Archival: Use Kafka Connect with sink connectors (e.g., S3 connector, HDFS connector) to continuously stream data from critical topics to durable, long-term storage. This data can be used for archival, analytics, or as a last resort for point-in-time recovery if the topic data is lost from Kafka itself.

- Log Retention Policies: Kafka's own data retention policies (log.retention.ms, log.retention.bytes) act as a form of short-term backup, allowing consumers to re-read recent data. However, this is not a DR backup strategy.

Failover and Failback Procedures

- Documented Procedures: Have clear, step-by-step, and regularly tested procedures for both failover (switching to the DR site) and failback (returning to the primary site).

- Automation vs. Manual: Decide on the level of automation for failover. Fully automated failover can reduce RTO but carries risks if triggered falsely. Manual or semi-automated failover allows for human judgment.

- Client Reconfiguration: Clients (producers and consumers) will need to be reconfigured to point to the DR cluster's brokers. This often involves DNS changes or updating client configurations.

- Data Reconciliation: After a failback, there might be a need to reconcile data produced on the DR site with the primary site, depending on the replication strategy and whether the DR site accepted writes.

Regular DR Drills and Testing

- Importance: Testing is the only way to validate that DR procedures work as expected and that RPO/RTO targets can be met. Drills also train the support team.

- Types of Drills:

- Tabletop exercises: Discussing the DR plan and walking through scenarios.

- Partial failover: Failing over a subset of applications or topics.

- Full failover: Simulating a complete site outage and failing over all critical services.

- Learning and Updating: After each drill, conduct a review, identify lessons learned, and update the DR plan and procedures accordingly.

Confluent provides guidance on multi-datacenter deployments, which often form the basis of DR strategies, covering aspects like data replication, timestamp preservation, and centralized schema management (Confluent Blog: Disaster Recovery Best Practices).

Key Takeaways: High Availability and Disaster Recovery

- HA Fundamentals: Kafka HA relies on partition replication, leader/follower model, and In-Sync Replicas (ISR). min.insync.replicas is crucial.

- DR Planning: Define RPO and RTO. Identify critical topics. Consider various disaster scenarios.

- DR Strategies:

- Asynchronous Cross-DC Replication (e.g., MirrorMaker2): Preferred for typical needs, balancing cost and protection. RPO in minutes/hours.

- Multi-Active / Near-Synchronous (e.g., Cluster Linking): For extreme business continuity, offering near-zero RPO/RTO but at high cost and complexity.

- Backup and Restore: Low cost, for non-critical systems or complementary archival.

- Backup (Complementary): Use Kafka Connect for archival to S3/HDFS. Disk snapshots are complex.

- Failover/Failback: Have documented, tested procedures. Plan for client reconfiguration.

- DR Drills: Regularly test DR plans to validate RPO/RTO and train teams.

Supporting Kafka Ecosystem Components

A production Kafka deployment often includes several ecosystem components that extend its capabilities. Supporting these components is integral to maintaining the overall health of your data streaming platform.

Kafka Connect

Kafka Connect is a framework for scalably and reliably streaming data between Apache Kafka and other systems using source (import) and sink (export) connectors.

- Deployment Models:

- Standalone Mode: Single worker process, typically for development or small tasks. Configuration is local.

- Distributed Mode: Multiple worker processes (forming a Connect cluster) coordinate to run connectors and tasks. This is the recommended mode for production due to scalability and fault tolerance. Configuration and state are stored in Kafka topics.

- Monitoring Connect Workers and Tasks:

- REST API: Kafka Connect exposes a REST API to get the status of connectors and their tasks (e.g., /connectors/{connector_name}/status, /connectors/{connector_name}/tasks/{task_id}/status). This is the primary way to check if a connector or task is running, failed, or paused.

- Logs: Connect worker logs are crucial for diagnosing issues. They contain information about connector startup, task execution, errors, and stack traces.

- JMX Metrics: Connect workers and connectors expose JMX metrics related to throughput, errors, latency, and internal state.

- Troubleshooting Common Connector Issues:

- Connector/Task Failures: If a connector or task status shows FAILED, check the Connect worker logs for stack traces. The REST API can also provide stack traces for failed tasks (Confluent Docs: Troubleshoot Self-Managed Kafka Connect). Common causes include misconfiguration, network issues, permission problems in the external system, or bugs in the connector.

- Data Type Conversion Problems / Schema Mismatches: Connectors often use converters (e.g., AvroConverter, JsonConverter) to serialize/deserialize data. Issues can arise if the data format in Kafka doesn't match what the connector expects, or if schemas are incompatible (especially when using Schema Registry).

- Performance Bottlenecks: Connectors can become bottlenecks. Monitor their throughput and latency. Performance issues might stem from the external system, network limitations, insufficient Connect worker resources, or inefficient connector logic. Tune connector-specific configurations (e.g., batch sizes, poll intervals).

- Security Configurations: Connectors interacting with secure Kafka clusters or secure external systems need proper security configurations (SSL, SASL, authentication credentials).

- Dynamic Log Configuration: For deeper troubleshooting, you can dynamically change log levels for specific connector loggers via the Connect REST API without restarting workers. This helps get detailed TRACE or DEBUG logs for a problematic connector (Confluent Docs: Dynamic Log Configuration).
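The status endpoint returns JSON that is easy to scan for failed tasks in a monitoring script. The sketch below works on an already-parsed response; the sample document is invented for illustration (connector name, worker addresses, and trace text are placeholders), and fetching it over HTTP is left out so the example stays self-contained.

```python
def failed_tasks(status):
    """Return (task_id, first line of the stack trace) for every FAILED task
    in a parsed /connectors/{connector_name}/status response."""
    failures = []
    for task in status.get("tasks", []):
        if task.get("state") == "FAILED":
            trace = task.get("trace", "")
            first_line = trace.splitlines()[0] if trace else ""
            failures.append((task["id"], first_line))
    return failures


# Hypothetical response body, already decoded from JSON.
SAMPLE_STATUS = {
    "name": "example-sink",
    "connector": {"state": "RUNNING", "worker_id": "10.0.0.5:8083"},
    "tasks": [
        {"id": 0, "state": "RUNNING", "worker_id": "10.0.0.5:8083"},
        {"id": 1, "state": "FAILED", "worker_id": "10.0.0.6:8083",
         "trace": "org.apache.kafka.connect.errors.ConnectException: "
                  "example failure\n\tat ..."},
    ],
}

for task_id, reason in failed_tasks(SAMPLE_STATUS):
    print("task", task_id, "failed:", reason)
```

Note that the connector itself can report RUNNING while individual tasks are FAILED, so alerting should look at task states and not only at the connector state.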

Kafka Streams

Kafka Streams is a client library for building real-time stream processing applications and microservices where the input and/or output data is stored in Kafka topics.

- Monitoring Streams Applications:

- JMX Metrics: Kafka Streams applications expose a rich set of JMX metrics related to processing rates, latency, state store access, RocksDB statistics, etc.

- Application-Level Metrics: Implement custom metrics within your Streams application to track business-specific KPIs or processing stages.

- Log analysis is also crucial for Streams applications.

- State Store Management and Troubleshooting:

- Kafka Streams allows for stateful processing using local state stores (RocksDB by default).

- Disk Space: Monitor disk space used by state stores, especially for windowed operations or large KTables. Ensure proper cleanup of old state if applicable (e.g., retention for window stores).

- RocksDB Issues: RocksDB has its own configuration parameters that can be tuned. In some environments (e.g., single CPU core), specific RocksDB settings might be needed to avoid performance issues (Confluent Streams FAQ: RocksDB on single core).

- Closing Iterators: When using state stores, especially persistent ones, it's critical to close any iterators obtained from them after use. Not doing so can lead to resource leaks and OutOfMemoryErrors (OOM) (Confluent Streams FAQ: Closing Iterators).

- Handling Processing Errors and Retries (DLQs):

- Implement robust error handling within your Streams topology. Decide whether to skip bad records, send them to a Dead Letter Queue (DLQ) for later analysis, or halt processing.

- Kafka Streams provides mechanisms like deserialization exception handlers and production exception handlers.

- Understanding Parallelism and Rebalancing:

- The maximum parallelism of a Streams application is bounded by the number of stream tasks, which is derived from the number of input topic partitions. Threads or instances beyond the task count stay idle.

- Like Kafka consumers, Streams applications participate in consumer group rebalancing. Rebalances can pause processing, so aim to minimize them by ensuring application stability and appropriate timeout configurations.
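As a rough worked example of the parallelism point, the sketch below assumes a single sub-topology whose task count equals the largest partition count among its input topics. The topic names and instance/thread counts are hypothetical, and real topologies with several sub-topologies create more tasks than this simple model shows.

```python
def streams_parallelism(
    input_partitions: dict[str, int],
    instances: int,
    threads_per_instance: int,
) -> dict[str, int]:
    """Estimate task and thread utilisation for a single sub-topology."""
    # Assumption: one task per partition of the largest input topic.
    tasks = max(input_partitions.values())
    threads = instances * threads_per_instance
    return {
        "tasks": tasks,
        "threads": threads,
        "busy_threads": min(tasks, threads),
        "idle_threads": max(0, threads - tasks),
    }


# Hypothetical deployment: 3 instances with 6 stream threads each.
plan = streams_parallelism(
    {"orders": 12, "customers": 12},
    instances=3,
    threads_per_instance=6,
)
print(plan)
```

In this hypothetical setup, 18 threads compete for 12 tasks, so 6 threads would sit idle. Adding input partitions, not threads, would be the way to raise the ceiling.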

Schema Registry (e.g., Confluent Schema Registry)

Schema Registry provides a centralized repository for managing and validating schemas for Kafka messages, promoting data governance and enabling safe schema evolution.

- Importance: Crucial for ensuring data compatibility between producers and consumers, especially in evolving systems. It helps prevent runtime errors due to incompatible data formats.

- Ensuring Availability: Schema Registry itself needs to be highly available as it's a critical dependency for producers and consumers that use it for schema validation or serialization/deserialization. Run it in a clustered mode for production.

- Troubleshooting Schema Registration/Retrieval Issues:

- Check Schema Registry logs for errors.

- Verify network connectivity to Schema Registry from clients.

- Ensure clients have correct Schema Registry URL configured.

- Check schema compatibility settings if evolution issues arise.

- Backup and Recovery of Schemas: Schemas stored in Schema Registry are critical metadata. Ensure you have a strategy for backing up the underlying storage of Schema Registry (often a Kafka topic itself, _schemas) or use its export/import capabilities.
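One possible export approach is to walk the Schema Registry REST API and save every registered version. The sketch below assumes the standard endpoints `GET /subjects`, `GET /subjects/<subject>/versions`, and `GET /subjects/<subject>/versions/<version>`. The `fetch` callable and the in-memory `fake_registry` are hypothetical stand-ins so the example runs without a live registry; restoring the export is not shown.

```python
import json
from typing import Callable
from urllib.parse import quote


def export_schemas(fetch: Callable[[str], object]) -> list[dict]:
    """Collect every version of every subject via a fetch(path) callable."""
    exported = []
    for subject in fetch("/subjects"):
        # Subject names may contain characters that need URL encoding.
        encoded = quote(subject, safe="")
        for version in fetch(f"/subjects/{encoded}/versions"):
            exported.append(fetch(f"/subjects/{encoded}/versions/{version}"))
    return exported


# Hypothetical in-memory registry keyed by request path, used instead of HTTP calls.
fake_registry = {
    "/subjects": ["orders-value"],
    "/subjects/orders-value/versions": [1, 2],
    "/subjects/orders-value/versions/1": {
        "subject": "orders-value", "version": 1, "id": 10,
        "schema": "{\"type\": \"string\"}",
    },
    "/subjects/orders-value/versions/2": {
        "subject": "orders-value", "version": 2, "id": 11,
        "schema": "{\"type\": \"bytes\"}",
    },
}

backup = export_schemas(fake_registry.__getitem__)
print(json.dumps(backup, indent=2))
```

Against a real registry, `fetch` could be a small wrapper that issues an HTTP GET to the registry URL plus the path and decodes the JSON response.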

Key Takeaways: Supporting Kafka Ecosystem Components

- Kafka Connect: Monitor workers/tasks via REST API and logs. Troubleshoot failures by checking stack traces, configurations, and external system connectivity. Use dynamic logging for detailed diagnostics.

- Kafka Streams: Monitor via JMX and application metrics. Manage state store disk usage and close iterators to prevent OOMs. Implement error handling (DLQs) and understand parallelism.

- Schema Registry: Ensure its availability as a critical component. Troubleshoot registration/retrieval issues. Plan for schema backup and recovery.

Kafka on Kubernetes (Optional, but relevant for DevOps/SRE)

Running Kafka on Kubernetes (K8s) is increasingly common, leveraging K8s for orchestration and lifecycle management. However, it introduces unique support challenges and best practices.

Deployment Strategies

- Using Kafka Operators: Operators (e.g., Strimzi, Confluent for Kubernetes) automate the deployment, management, and operational tasks of Kafka clusters on K8s. They handle complex aspects like broker configuration, rolling updates, scaling, and security. This is generally the recommended approach for production.

- Helm Charts: Helm charts can simplify the deployment of Kafka and its components (ZooKeeper/KRaft, Connect, etc.) on K8s by packaging pre-configured K8s resource definitions. However, ongoing operational management might be less automated than with an operator (meshIQ: Kafka on Kubernetes Integration).

Persistent Storage Considerations

Kafka is a stateful application, requiring durable storage for message logs.

- StatefulSets: Use StatefulSets for Kafka brokers. They provide stable network identifiers (pod hostnames) and stable persistent storage for each broker pod, which is crucial for Kafka's operation.

- Persistent Volumes (PVs) and Persistent Volume Claims (PVCs): Brokers require persistent storage. PVs (backed by network storage like EBS, GCE Persistent Disks, or on-prem SANs) are claimed by PVCs, which are then mounted into broker pods.

- Storage Class Selection: Choose a StorageClass that provides the necessary performance (IOPS, throughput) and durability for Kafka. High-performance SSD-backed storage is typically required. Consider replication features of the underlying storage if they can complement Kafka's own replication.

Networking in Kubernetes for Kafka

Exposing Kafka brokers and ensuring proper network communication within K8s can be complex.

- Service Exposure:

- Internal Access (within K8s): A Headless Service is often used for inter-broker communication and for client discovery within the same K8s cluster. This gives each broker pod a stable DNS entry.

- External Access (outside K8s): Options include LoadBalancer services (one per broker or a single entry point with careful routing), NodePort services (less ideal for production due to port management), or Ingress controllers (often more suitable for HTTP-based Kafka components like REST Proxy or Schema Registry, but can be configured for TCP passthrough for Kafka brokers with some Ingress controllers).

- Advertised Listeners: Correct configuration of advertised.listeners for Kafka brokers is critical in K8s. Brokers must advertise addresses that are reachable by clients, whether they are internal or external to the K8s cluster. Operators often handle this complex configuration.

- NetworkPolicies: Use K8s NetworkPolicies to enforce network segmentation and control traffic flow to and from Kafka broker pods for security.
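To make the advertised listener requirement concrete, here is a minimal sketch that derives a per-broker advertised.listeners value from a StatefulSet pod ordinal. It assumes the default cluster domain (cluster.local) and the usual pod DNS pattern behind a Headless Service. The listener names INTERNAL and EXTERNAL, the ports, and all resource and host names are hypothetical, and the listener names would also have to appear in the broker's listeners and listener.security.protocol.map settings.

```python
def advertised_listeners(
    statefulset: str,
    ordinal: int,
    headless_service: str,
    namespace: str,
    external_host: str,
    internal_port: int = 9092,
    external_port: int = 9094,
) -> str:
    """Build an advertised.listeners value for one broker pod."""
    # Stable per-pod DNS name provided by a StatefulSet behind a Headless Service.
    pod_dns = f"{statefulset}-{ordinal}.{headless_service}.{namespace}.svc.cluster.local"
    return (
        f"INTERNAL://{pod_dns}:{internal_port},"
        f"EXTERNAL://{external_host}:{external_port}"
    )


# Hypothetical three-broker cluster with one external hostname per broker.
for i in range(3):
    value = advertised_listeners(
        "kafka", i, "kafka-headless", "streaming", f"kafka-{i}.example.com"
    )
    print(f"broker {i}: advertised.listeners={value}")
```

Each broker advertises its own addresses, which is why a single shared external address only works when routing behind it can still reach the specific broker a client asks for.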

Monitoring and Logging in Kubernetes

- Prometheus/Grafana: The Prometheus Operator and kube-prometheus-stack are commonly used to deploy a monitoring stack in K8s. Kafka JMX metrics can be scraped by Prometheus (e.g., using a JMX exporter running as a sidecar container or as an agent inside the broker pod).

- Log Collection: Use a cluster-level logging solution. Common patterns include running a logging agent (like Fluentd or Fluent Bit) as a DaemonSet on each K8s node to collect container logs, or using a sidecar container within the Kafka broker pod to forward logs to a centralized logging system (ELK, Splunk, Loki).

Common Kubernetes-Specific Troubleshooting

- Pod Restarts / OOMKilled Pods:

- Check pod logs (kubectl logs <pod_name>) and describe pod output (kubectl describe pod <pod_name>) for reasons. OOMKilled indicates the pod exceeded its memory limit.

- Verify resource requests and limits for Kafka pods. Ensure they are appropriate for the workload.

- Persistent Volume Issues:

- Problems with PVCs binding to PVs, or issues with the underlying storage (e.g., disk full on the storage system, network storage latency).

- Check PVC status (kubectl get pvc) and PV status (kubectl get pv). Examine events for storage-related errors.

- Network Connectivity Problems:

- Issues with service discovery (DNS resolution within K8s).

- Incorrect Service configurations or NetworkPolicies blocking traffic.

- Problems with external access (LoadBalancer, Ingress misconfiguration).

- Use tools like kubectl exec to run network diagnostic commands (ping, nslookup, netcat) from within pods.

- Resource Limits and Requests: Ensure Kafka pods have adequate CPU and memory requests (guaranteed resources) and limits (maximum allowed). Misconfigured limits can lead to throttling or OOMKilled pods.

- Troubleshooting Operator-Specific Issues: If using a Kafka operator, consult its documentation for troubleshooting guides. Check operator logs for errors related to managing the Kafka cluster. General K8s operational guidance (e.g., meshIQ: Troubleshooting Kafka Monitoring on K8s) often applies here as well.
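The pod and storage checks above can be automated by parsing kubectl's JSON output. The sketch below assumes input in the shape produced by `kubectl get pod <pod_name> -o json` and `kubectl get pvc -o json`. The sample objects are hypothetical and trimmed to the fields that are read.

```python
def oom_killed_containers(pod: dict) -> list[tuple[str, int]]:
    """Return (container name, restart count) for containers last killed by OOM."""
    hits = []
    for cs in pod.get("status", {}).get("containerStatuses", []):
        terminated = cs.get("lastState", {}).get("terminated") or {}
        if terminated.get("reason") == "OOMKilled":
            hits.append((cs["name"], cs.get("restartCount", 0)))
    return hits


def unbound_pvcs(pvc_list: dict) -> list[tuple[str, str]]:
    """Return (PVC name, phase) for every claim that is not in the Bound phase."""
    result = []
    for item in pvc_list.get("items", []):
        phase = item.get("status", {}).get("phase", "Unknown")
        if phase != "Bound":
            result.append((item["metadata"]["name"], phase))
    return result


# Hypothetical, trimmed output of: kubectl get pod kafka-0 -o json
sample_pod = {
    "metadata": {"name": "kafka-0"},
    "status": {
        "containerStatuses": [
            {
                "name": "kafka",
                "restartCount": 4,
                "lastState": {"terminated": {"reason": "OOMKilled", "exitCode": 137}},
            },
            {"name": "jmx-exporter", "restartCount": 0, "lastState": {}},
        ]
    },
}

# Hypothetical, trimmed output of: kubectl get pvc -o json
sample_pvcs = {
    "items": [
        {"metadata": {"name": "data-kafka-0"}, "status": {"phase": "Bound"}},
        {"metadata": {"name": "data-kafka-1"}, "status": {"phase": "Pending"}},
    ]
}

print("OOMKilled containers:", oom_killed_containers(sample_pod))
print("PVCs not bound:", unbound_pvcs(sample_pvcs))
```

A container that keeps reappearing in the first list is a hint to revisit its memory limit, while a claim stuck in Pending usually points at the StorageClass or the underlying storage.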

Key Takeaways: Kafka on Kubernetes

- Deployment: Use Kafka Operators (Strimzi, Confluent) or Helm charts for lifecycle management.

- Storage: Employ StatefulSets with PVs/PVCs using high-performance StorageClasses.

- Networking: Configure Headless Services for internal discovery and an appropriate exposure method (LoadBalancer or NodePort Services, or Ingress) for external access. Correct advertised.listeners settings are critical. Use NetworkPolicies for security.

- Monitoring/Logging: Integrate with K8s-native monitoring (Prometheus/Grafana) and logging (Fluentd/Loki).

- Troubleshooting: Focus on pod status (restarts, OOMKilled), PV/PVC issues, K8s networking, resource limits, and operator-specific problems.

Process Aspects for Kafka Support

Beyond the technical intricacies, effective Kafka production support relies heavily on well-defined processes and a strong organizational framework. These aspects ensure consistency, efficiency, and continuous improvement in managing the Kafka environment.

Incident Management Workflow

A structured incident management process is crucial for minimizing downtime and impact.

- Detection: Incidents are typically detected through automated monitoring alerts (e.g., consumer lag exceeding threshold, broker down) or user reports.

- Diagnosis: Upon detection, the support team begins diagnosis using a systematic approach. This involves checking monitoring dashboards, logs, and using runbooks for known issues. The goal is to quickly identify the root cause or a contributing factor.

- Escalation: Define clear escalation paths. If first-level support cannot resolve the issue within a certain timeframe or if the impact is severe, the incident should be escalated to more experienced engineers or specialized teams (e.g., network team, storage team).

- Resolution: Apply fixes or workarounds to restore service. This might involve restarting a component, reconfiguring a setting, or failing over to a DR system.

- Communication: Keep stakeholders (users, management, other technical teams) informed about the incident status, impact, and expected resolution time. Clear and timely communication is key.

- Post-Mortem/Root Cause Analysis (RCA): After the incident is resolved, conduct a blameless post-mortem to understand the root cause(s), what went well during the response, what could be improved, and what preventative actions can be taken to avoid recurrence.
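As an illustration of the detection step, the sketch below shows one way a "consumer lag exceeding threshold" check might be expressed. It assumes lag is computed per partition as the log end offset minus the committed offset. The offsets, the threshold, and the choice to treat a partition with no committed offset as fully lagging are hypothetical simplifications.

```python
def partitions_over_threshold(
    end_offsets: dict[int, int],
    committed_offsets: dict[int, int],
    threshold: int,
) -> dict[int, int]:
    """Return {partition: lag} for partitions whose lag exceeds the threshold."""
    lagging = {}
    for partition, end in end_offsets.items():
        # Simplification: a partition with no commit is treated as lagging from offset 0.
        committed = committed_offsets.get(partition, 0)
        lag = max(0, end - committed)
        if lag > threshold:
            lagging[partition] = lag
    return lagging


# Hypothetical offsets for a three-partition topic and one consumer group.
end_offsets = {0: 15_000, 1: 15_200, 2: 14_900}
committed_offsets = {0: 14_990, 1: 9_200}

alerts = partitions_over_threshold(end_offsets, committed_offsets, threshold=1_000)
print(alerts)
```

In practice the threshold would be tuned per consumer group, and an alert would normally fire only after the lag stays above it for some time, so short bursts do not page anyone.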

Change Management for Kafka Clusters

Changes to a production Kafka cluster, if not managed properly, can introduce instability.

- Documenting Changes: All changes (configuration updates, software upgrades, scaling operations, security patches) must be documented, including the reason for the change, the exact steps, and expected impact.

- Review and Approval Process: Implement a review process where proposed changes are assessed by peers or a change advisory board (CAB) for potential risks and benefits. Formal approval should be required before implementation.

- Scheduling Changes: Schedule changes during planned maintenance windows, preferably during off-peak hours, to minimize potential impact on users. Notify stakeholders in advance.

- Rollback Plans: For every change, have a documented rollback plan outlining the steps to revert the change if it causes unexpected issues. Test rollback procedures where feasible.

Runbooks and Documentation

Comprehensive documentation is a cornerstone of efficient support.

- Runbooks: Create detailed, step-by-step runbooks (or playbooks) for common troubleshooting scenarios and operational procedures. Examples:

- Broker restart procedure.

- Partition reassignment steps.

- Diagnosing high consumer lag.

- DR failover/failback procedures.

- Adding/removing a broker.

- Cluster Documentation: Maintain up-to-date documentation of the Kafka cluster architecture, including:

- Network topology diagrams.

- Broker configurations (key settings).

- Topic configurations (for critical topics).

- Security setup (authentication, authorization mechanisms).

- Monitoring and alerting setup.

- Contact points for dependent teams and vendors.

Capacity Planning